Robust Physical-World Attacks on Deep Learning Visual Classification

Authors: Kevin Eykholt, Ivan Evtimov, Earlence Fernandes, Bo Li, Amir Rahmati, Chaowei Xiao, Atul Prakash, Tadayoshi Kohno, and Dawn Song.

Presentation by: George Hendrick

Blog post by: Obiora Odugu

Link to Paper: Read the Paper

Summary of the Paper

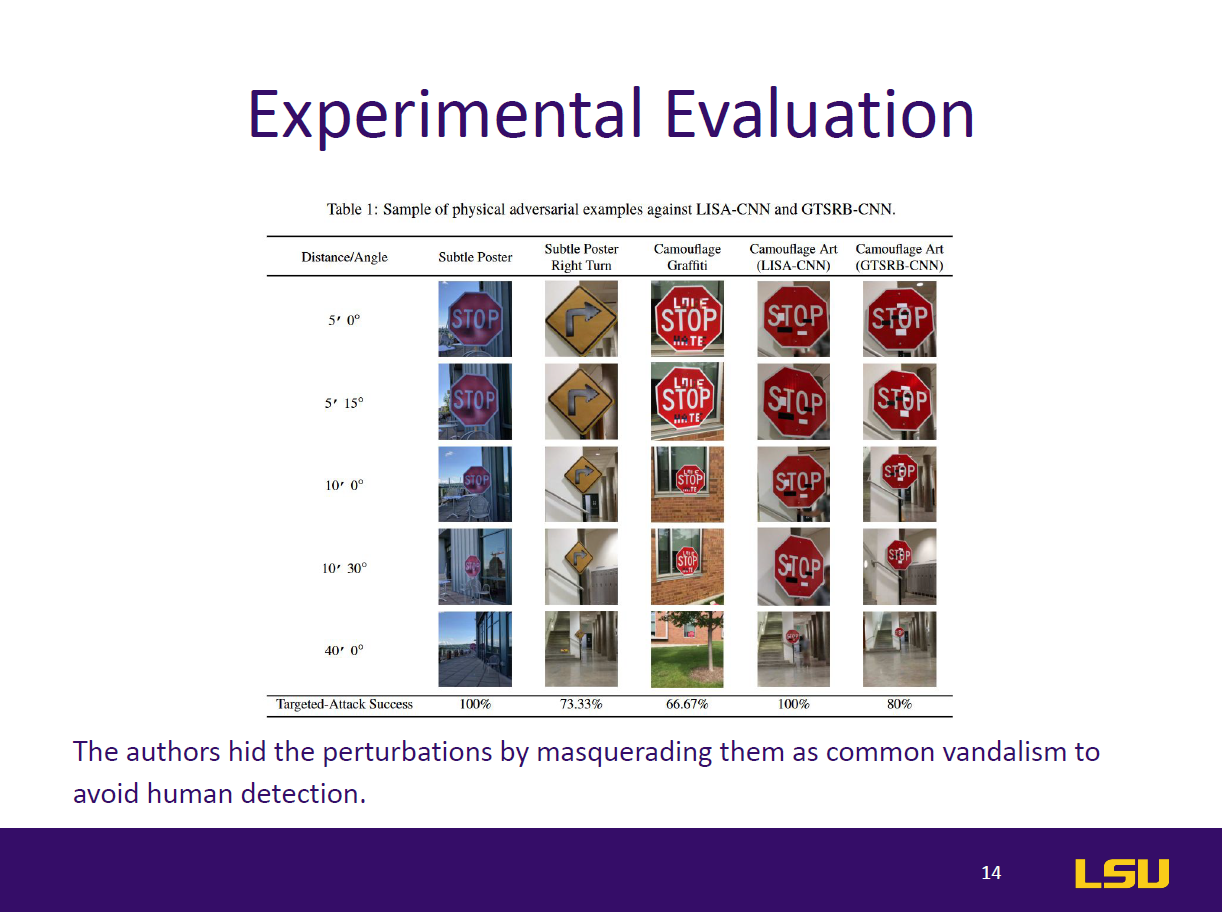

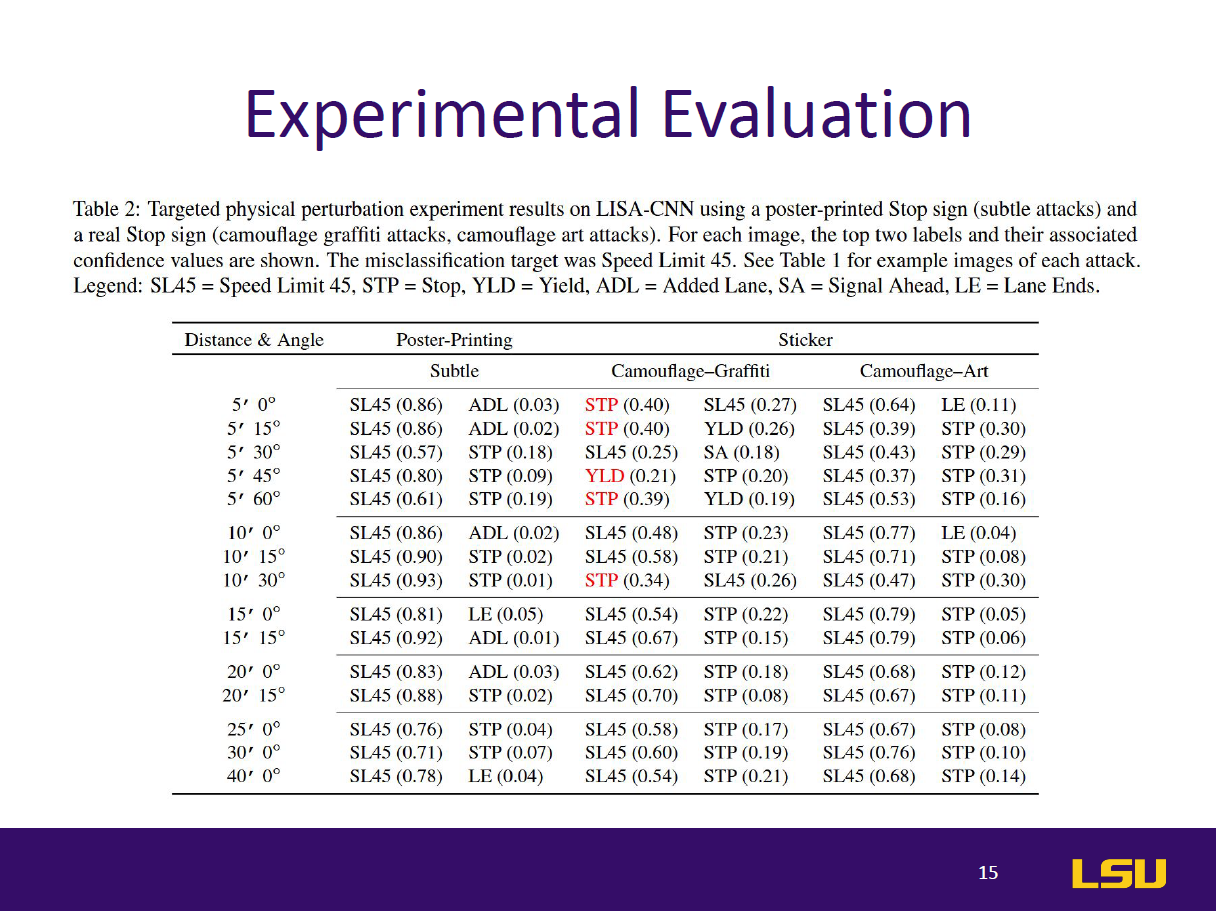

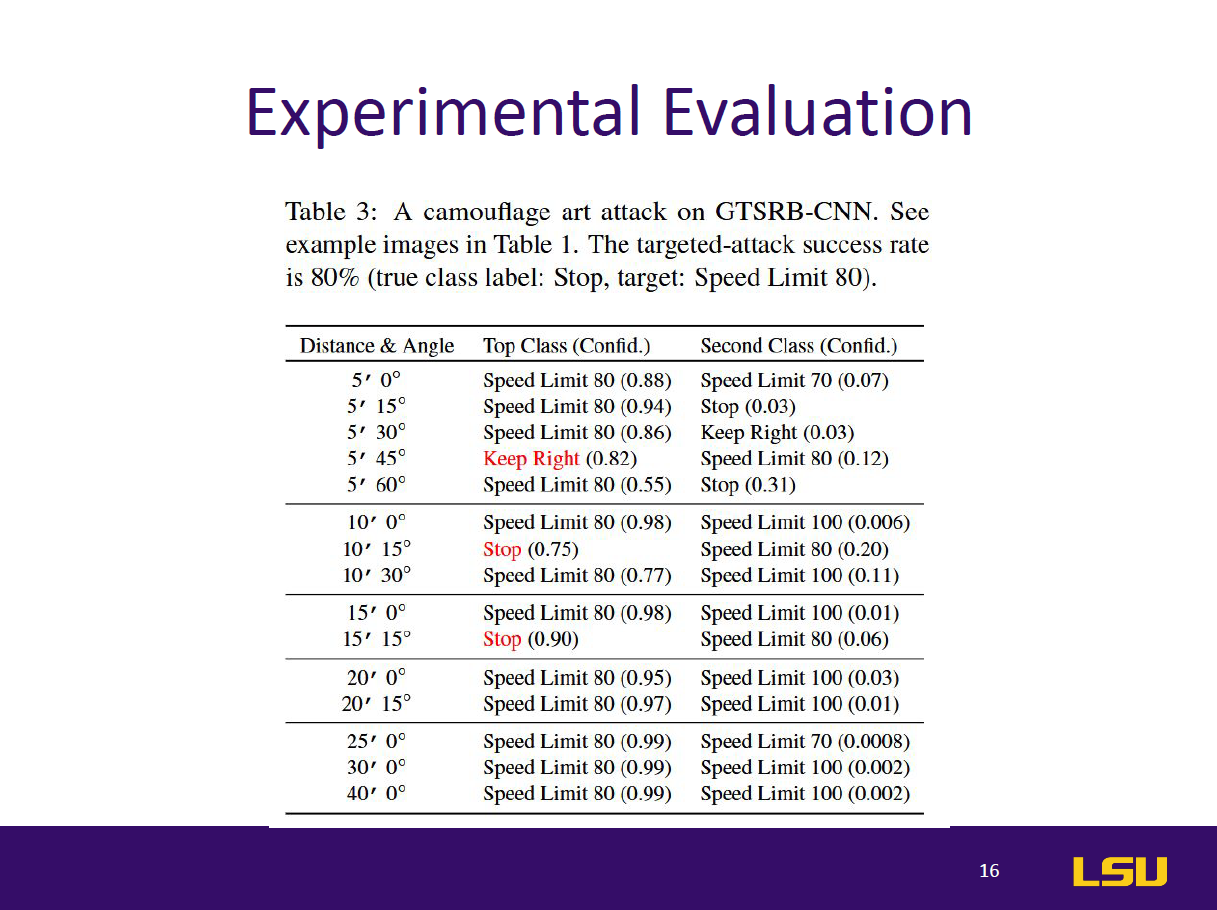

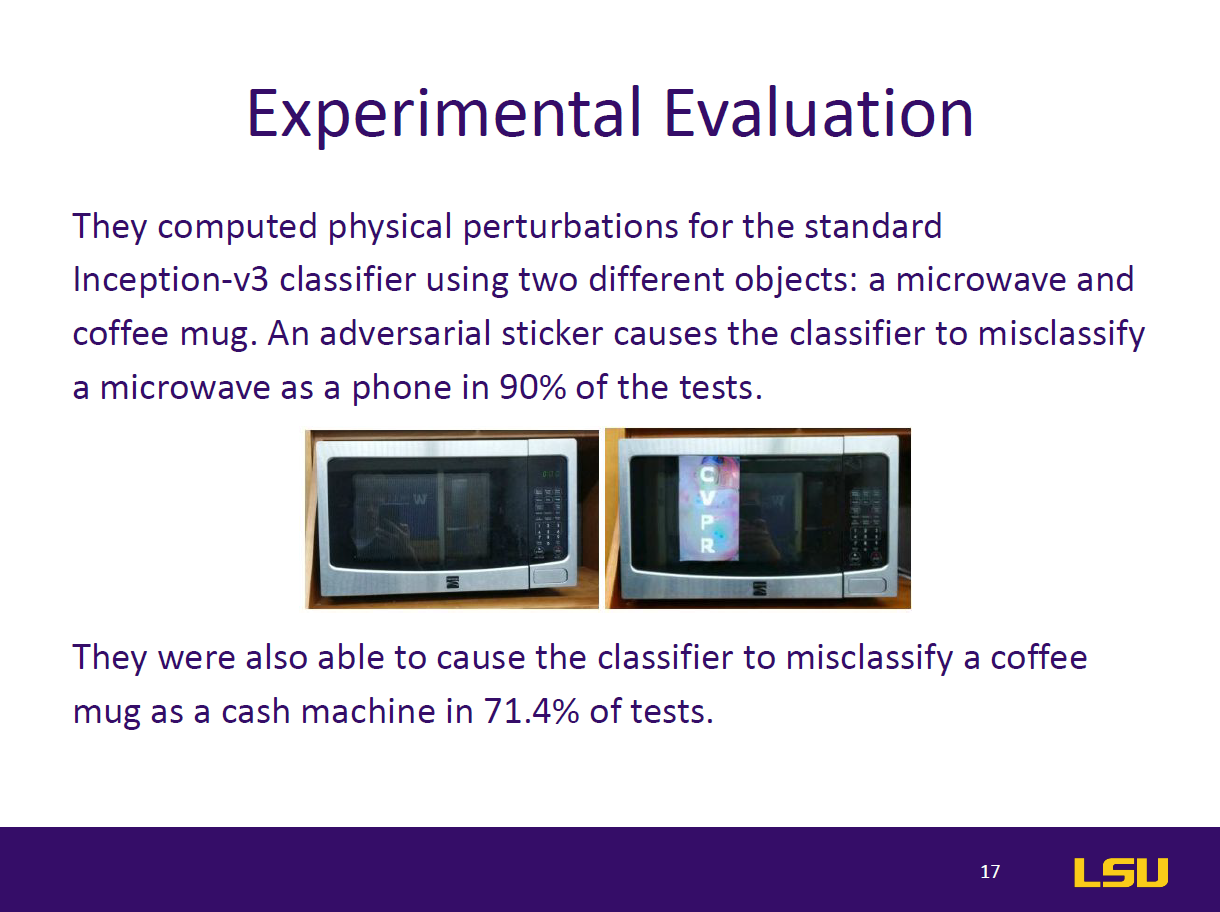

This paper introduces RP2 (Robust Physical Perturbations), an algorithm that generates physical adversarial perturbations capable of deceiving deep neural network (DNN) classifiers under real-world conditions. The authors target road sign classification, demonstrating that small, graffiti-like stickers applied to a stop sign can cause a DNN to classify it as a different sign (e.g., Speed Limit 45). The attacks remain effective under changes in lighting, viewing angle, and distance. The study proposes a two-stage evaluation, consisting of stationary lab tests and drive-by field tests, to approximate real-world conditions. Reported results reach 100% targeted misclassification in lab tests and 84.8% in field tests. The paper also extends the attack to general objects, such as a microwave that is misclassified as a phone. By exposing these vulnerabilities in vision-based AI systems, the work underscores the need for robust defenses against physical-world adversarial examples in safety-critical applications such as autonomous driving.
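To make the sticker idea concrete, here is a minimal toy sketch of the general recipe: optimize one perturbation, restricted by a mask to a "sticker" region, so that the target class wins on average across several viewing conditions. This is not the authors' implementation. The linear softmax classifier, the 16-feature "image", the brightness scales standing in for physical variation, and the L2 penalty (standing in for the paper's regularization terms) are all assumptions chosen so the example runs with NumPy alone. Pixel values are also not clipped to a valid range, which a real attack would need to handle.

```python
import numpy as np

def softmax(z):
    z = z - z.max(axis=-1, keepdims=True)  # subtract max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=-1, keepdims=True)

def predict(W, b, x):
    """Predicted class index for each row of x under a linear classifier."""
    return np.argmax(x @ W + b, axis=-1)

def masked_targeted_perturbation(W, b, base, scales, mask, target,
                                 lam=0.05, lr=0.1, steps=300):
    """Toy sticker-style attack (hypothetical helper, not from the paper).

    base:   (d,) clean image as a flat feature vector
    scales: (n,) brightness factors, a stand-in for varying conditions
    mask:   (d,) 1.0 where the sticker may change pixels, 0.0 elsewhere
    """
    delta = np.zeros_like(base)
    onehot = np.eye(W.shape[1])[target]
    for _ in range(steps):
        # Each view dims the whole perturbed object, sticker included.
        views = scales[:, None] * (base + mask * delta)
        probs = softmax(views @ W + b)
        # Gradient of cross-entropy toward the target class w.r.t. each view.
        grad_views = (probs - onehot) @ W.T
        # Chain rule through the brightness scale, averaged over all views.
        grad_delta = mask * (scales[:, None] * grad_views).mean(axis=0)
        grad_delta += lam * mask * delta  # L2 penalty keeps the sticker small
        delta -= lr * grad_delta
    return mask * delta

# Toy setup: class 0 ("stop") responds to features 0-7, class 1 to features 8-15.
d, k = 16, 3
W = np.zeros((d, k))
W[:8, 0] = 1.0
W[8:, 1] = 1.0
b = np.zeros(k)
base = np.concatenate([np.full(8, 0.8), np.full(8, 0.2)])
scales = np.array([0.6, 0.8, 1.0])
mask = np.zeros(d)
mask[4:12] = 1.0  # the sticker may only touch the middle of the "sign"

delta = masked_targeted_perturbation(W, b, base, scales, mask, target=1)
clean_pred = predict(W, b, scales[:, None] * base)
adv_pred = predict(W, b, scales[:, None] * (base + delta))
print("clean:", clean_pred, "perturbed:", adv_pred)
```

Averaging the loss over several conditions, rather than attacking a single image, is what makes the perturbation hold up when the view changes. The mask is what confines it to a sticker-sized region instead of the whole sign.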

Presentation Breakdown

Discussion and Class Insights

Q1: Do you believe the attacks outlined in this work are important to consider as refinement of autonomous vehicle behavior continues?

Q2: What do you believe is the solution to attacks such as sticker attacks on stop signs? Should the AI be trained to identify altered stop signs? Is it possible to train the AI on all types of alterations?

Ruslan Akbarzade: Ruslan emphasized that these types of attacks are important to study because they could impact vehicle behavior. He pointed out that the issue may not lie solely with the model—if the model is robust, it should ideally detect such anomalies. He also noted that even humans might misinterpret altered signs, suggesting that the fault doesn’t rest entirely with the system.

Obiora: Obiora proposed incorporating a cybersecurity agent within the vehicle's system that can detect and flag potential adversarial attacks early. This agent would alert the system before the altered input misleads the model, acting as a real-time defense mechanism.

Aleksandar: Aleksandar expressed skepticism about the continued relevance of such attacks, stating that advances in AI have likely made these specific methods outdated. However, he acknowledged the value of understanding past vulnerabilities to strengthen future systems.

Obiora: Obiora added that certain physical attributes—such as the distinct shapes of stop signs versus speed limit signs—could serve as helpful features for classification, even under adversarial attack conditions.

Professor: The professor highlighted the broader significance of the paper for future fully autonomous vehicles. He discussed how targeted attacks, such as those triggered to affect specific users, present serious risks. He praised the experimental design of the paper and stressed the importance of learning how to extract actionable insights from performance metrics and testing methodologies.

Audience Questions and Answers

Professor: What is the distinction between a classifier and a detector in the context of this study?

Answer: When no one responded, the professor clarified that a classifier identifies what an object is once the object has already been isolated (e.g., determining that a cropped image shows a stop sign). A detector, in contrast, both locates and identifies objects within a broader scene, which makes it more robust to real-world variability. This difference matters for understanding the paper's scope and limitations, because the study focused on classifiers rather than detectors.
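The difference in inputs and outputs can be illustrated with a small sketch. Everything here is a hypothetical toy: `classify` is a brightness threshold rather than a neural network, and `detect` is a naive sliding-window wrapper around it. Modern detectors are built quite differently, so this only shows the interface distinction, not how real systems work.

```python
import numpy as np

def classify(crop):
    """Classifier: returns one label for an already-cropped object."""
    return "sign" if crop.mean() > 0.5 else "background"

def detect(scene, window=4, stride=4):
    """Detector: searches the full scene and returns (box, label) pairs.

    Each box is (top, left, height, width) in pixel coordinates.
    """
    detections = []
    height, width = scene.shape
    for top in range(0, height - window + 1, stride):
        for left in range(0, width - window + 1, stride):
            crop = scene[top:top + window, left:left + window]
            label = classify(crop)
            if label != "background":
                detections.append(((top, left, window, window), label))
    return detections

# A dark 8x12 scene with one bright 4x4 "sign" in the lower-right corner.
scene = np.zeros((8, 12))
scene[4:8, 8:12] = 1.0

crop_label = classify(scene[4:8, 8:12])  # the object is already isolated
found = detect(scene)                    # the object must be located first
print(crop_label, found)
```

The classifier is told where the object is and only answers "what is it?". The detector also has to answer "where is it?", so an attack evaluated only on cropped signs does not automatically say how a full detection pipeline would behave.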

Professor: Why did the authors choose the specific architectures (LISA-CNN and GTSRB-CNN) over more modern or complex models?

George: George suggested that the authors may have selected CNN-based classifiers because they were aware of existing vulnerabilities in such architectures. Simpler CNNs are still widely used and serve as a meaningful starting point to demonstrate the feasibility and generalizability of physical adversarial attacks.