CARLA: An Open Urban Driving Simulator

Authors: Alexey Dosovitskiy, German Ros, Felipe Codevilla, Antonio Lopez, Vladlen Koltun

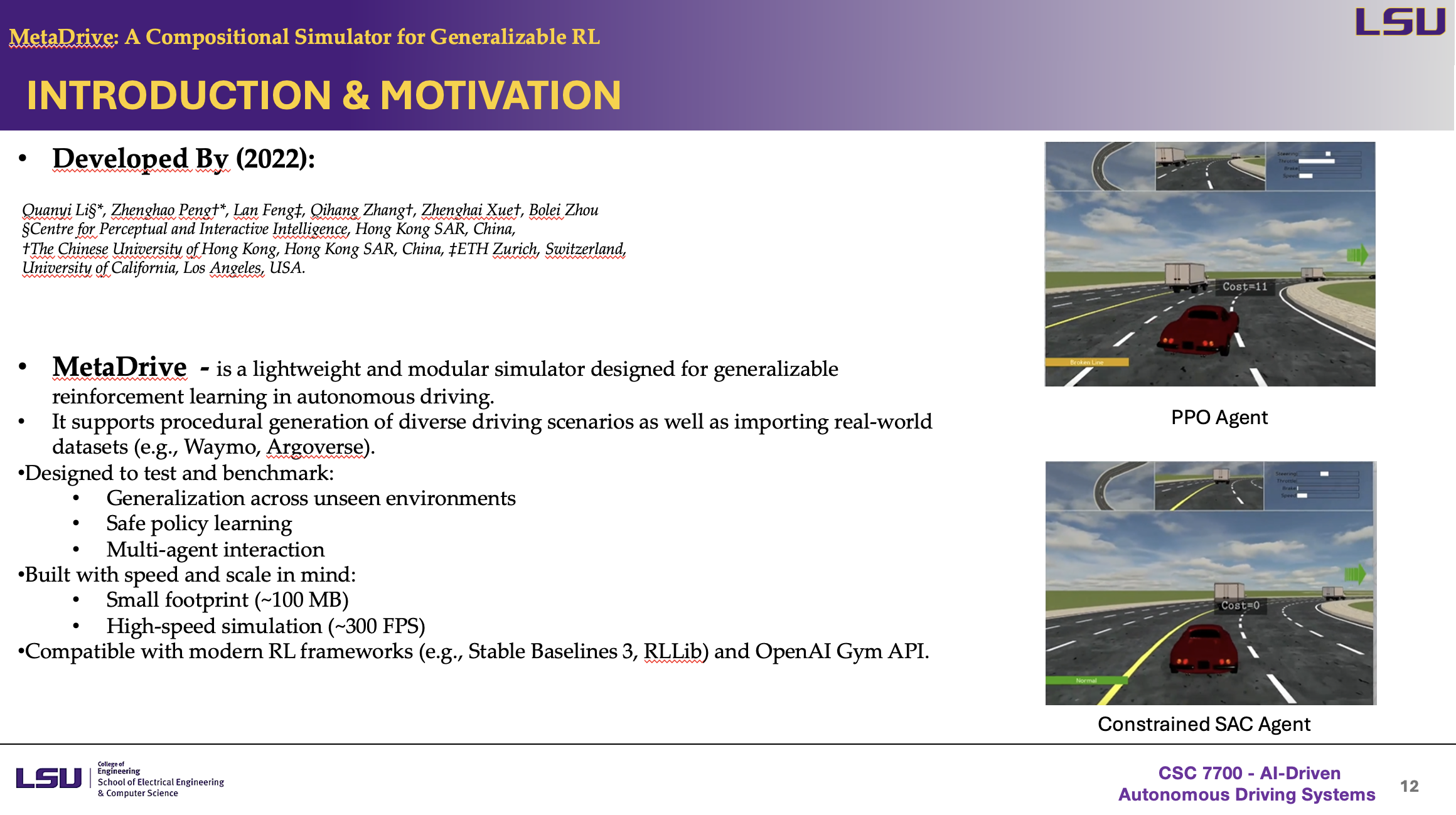

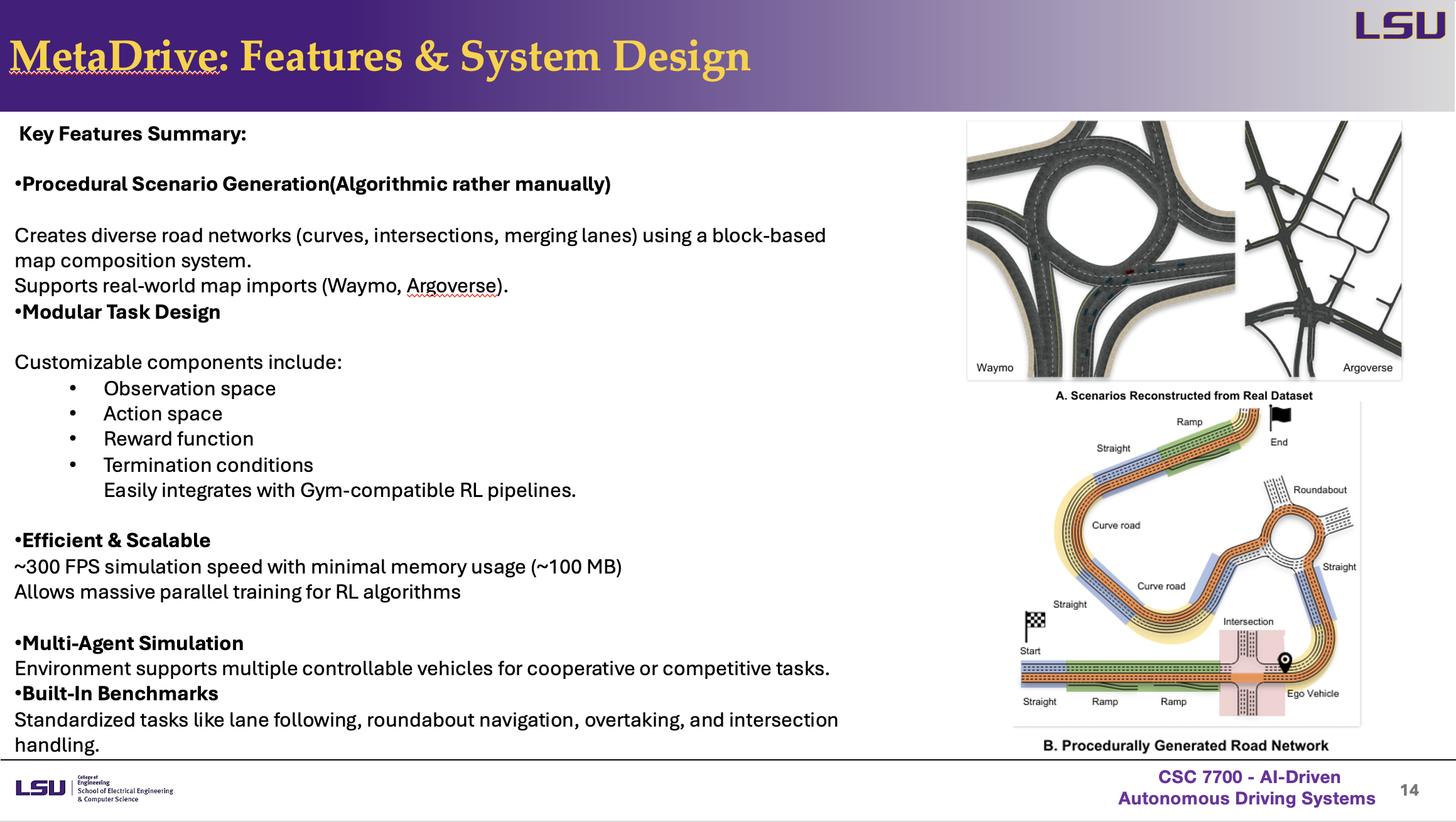

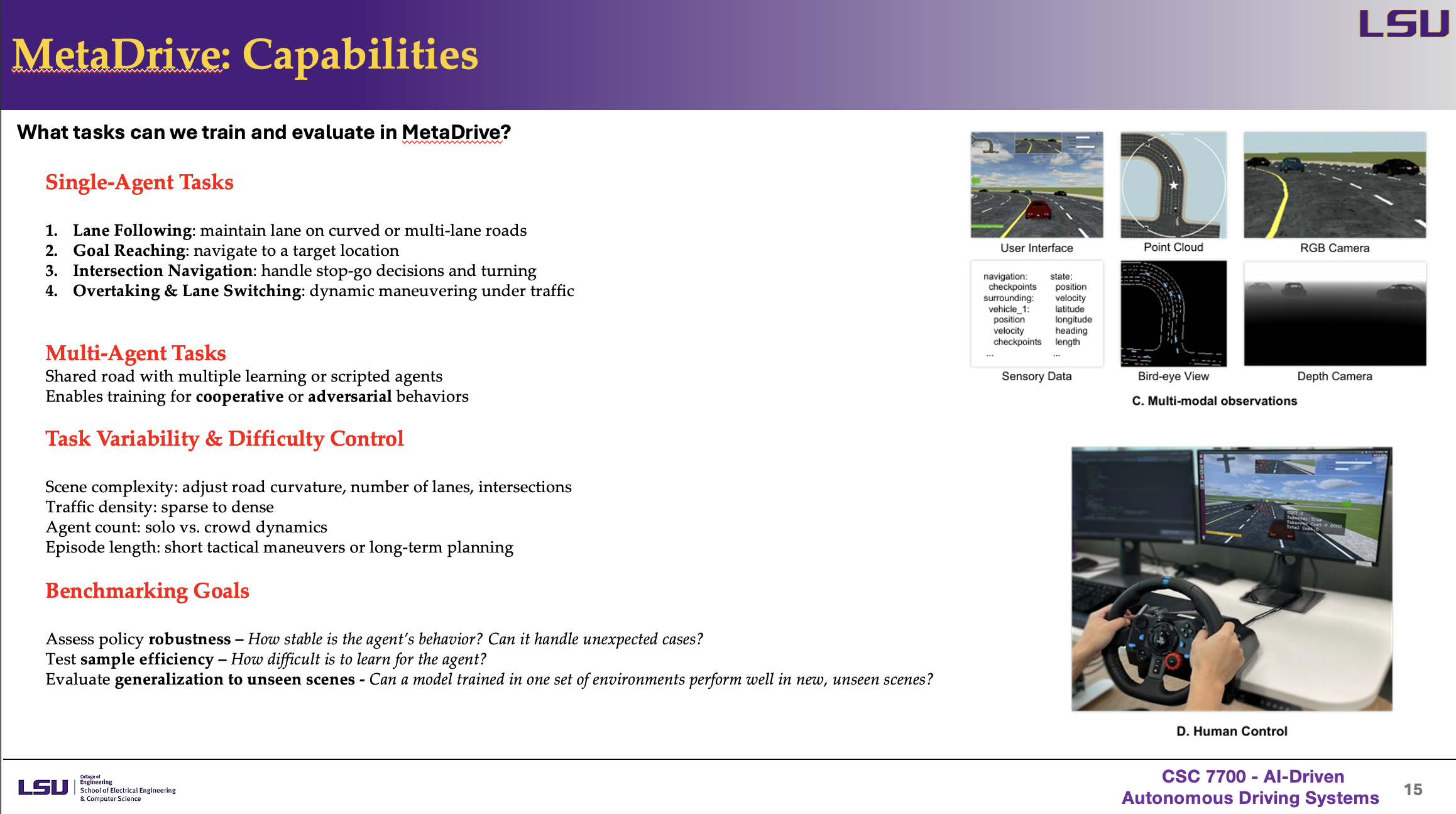

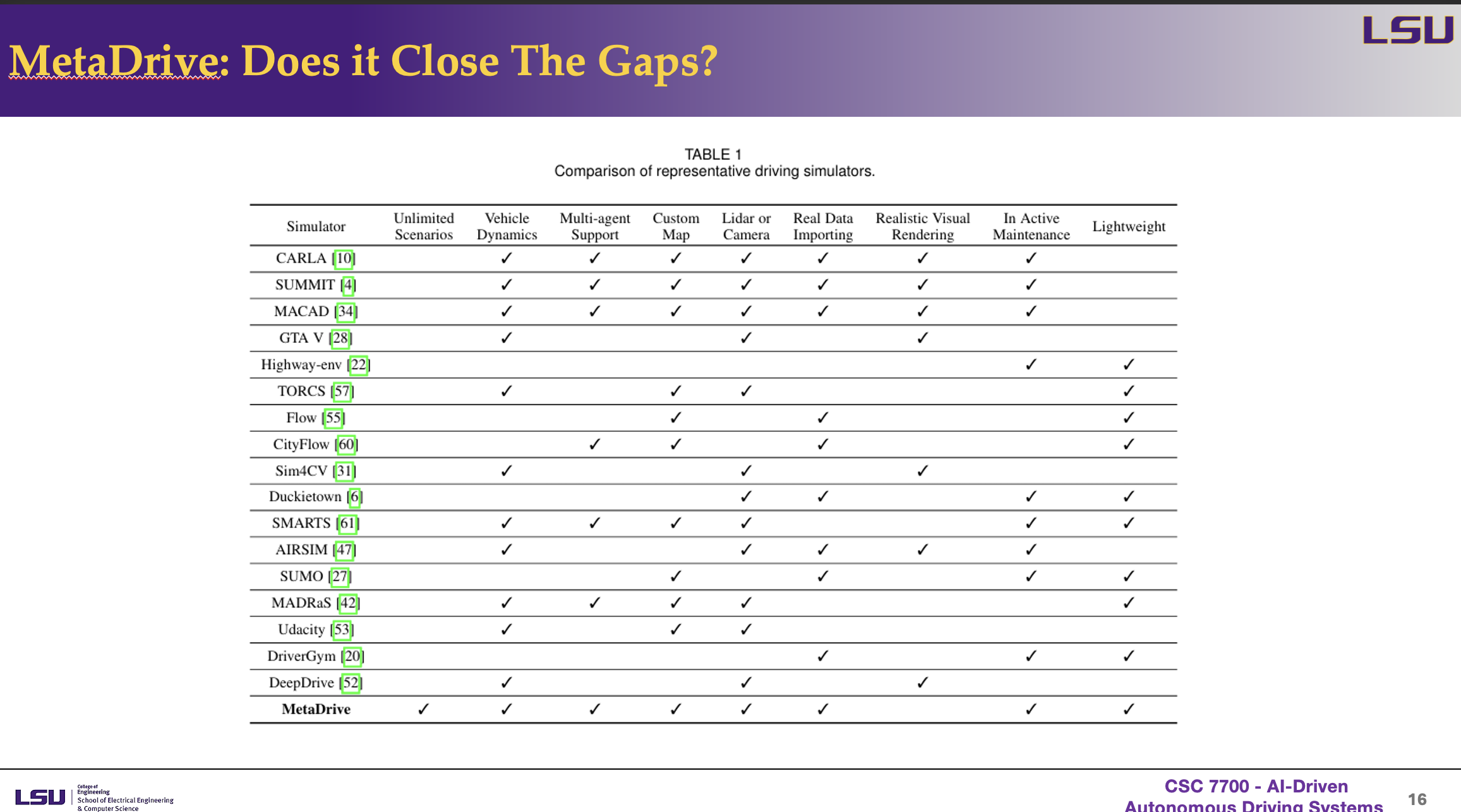

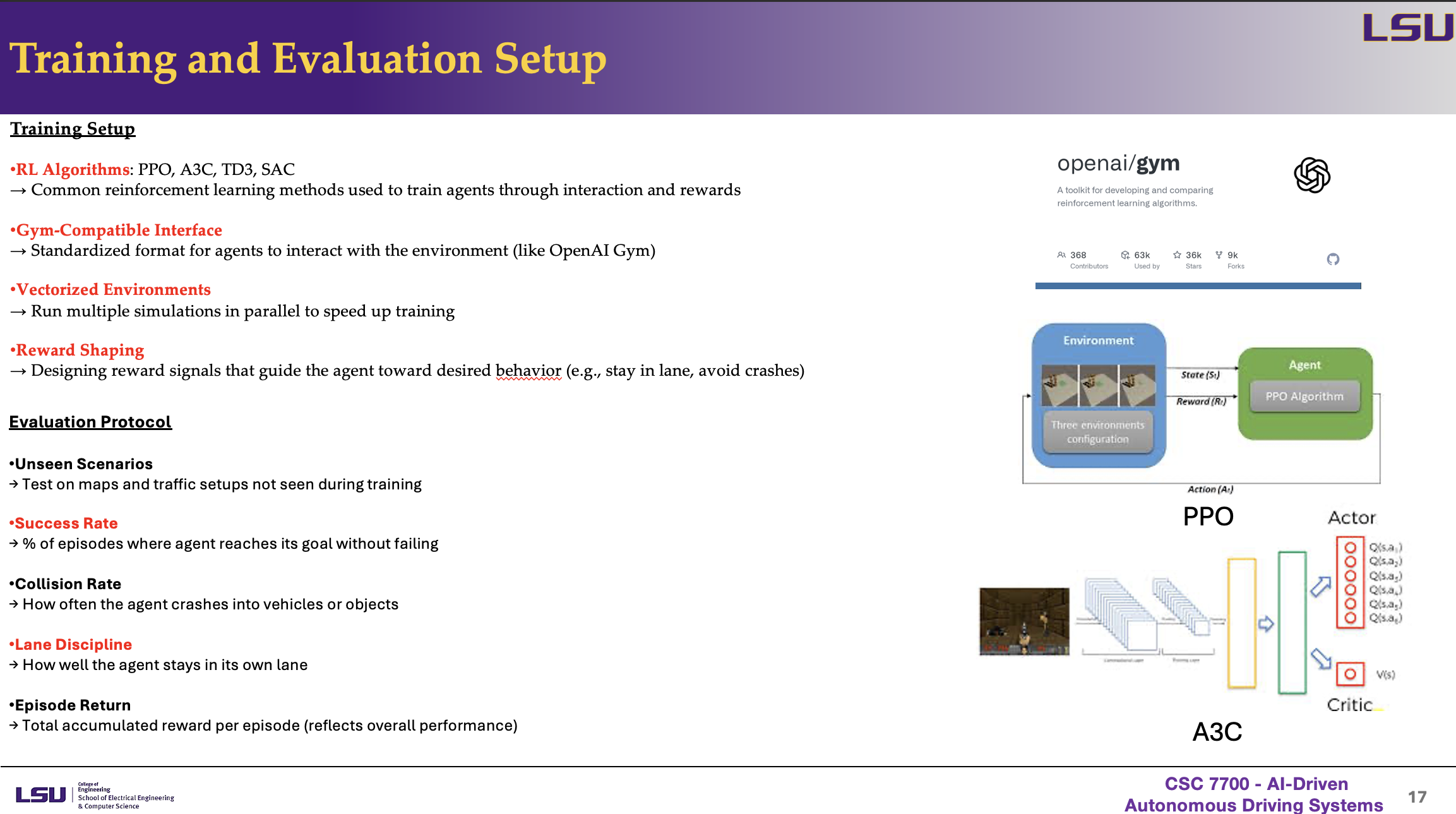

MetaDrive: Composing Diverse Driving Scenarios for Generalizable Reinforcement Learning

Authors: Quanyi Li, Zhenghao Peng, Lan Feng, Qihang Zhang, Zhenghai Xue, Bolei Zhou

Presentation by: Ruslan Akbarzade

Time of Presentation: April 15, 2025

Blog post by: Sujan Gyawali

Link to CARLA: Read the Paper

Link to MetaDrive: Read the Paper

Summary of the Paper

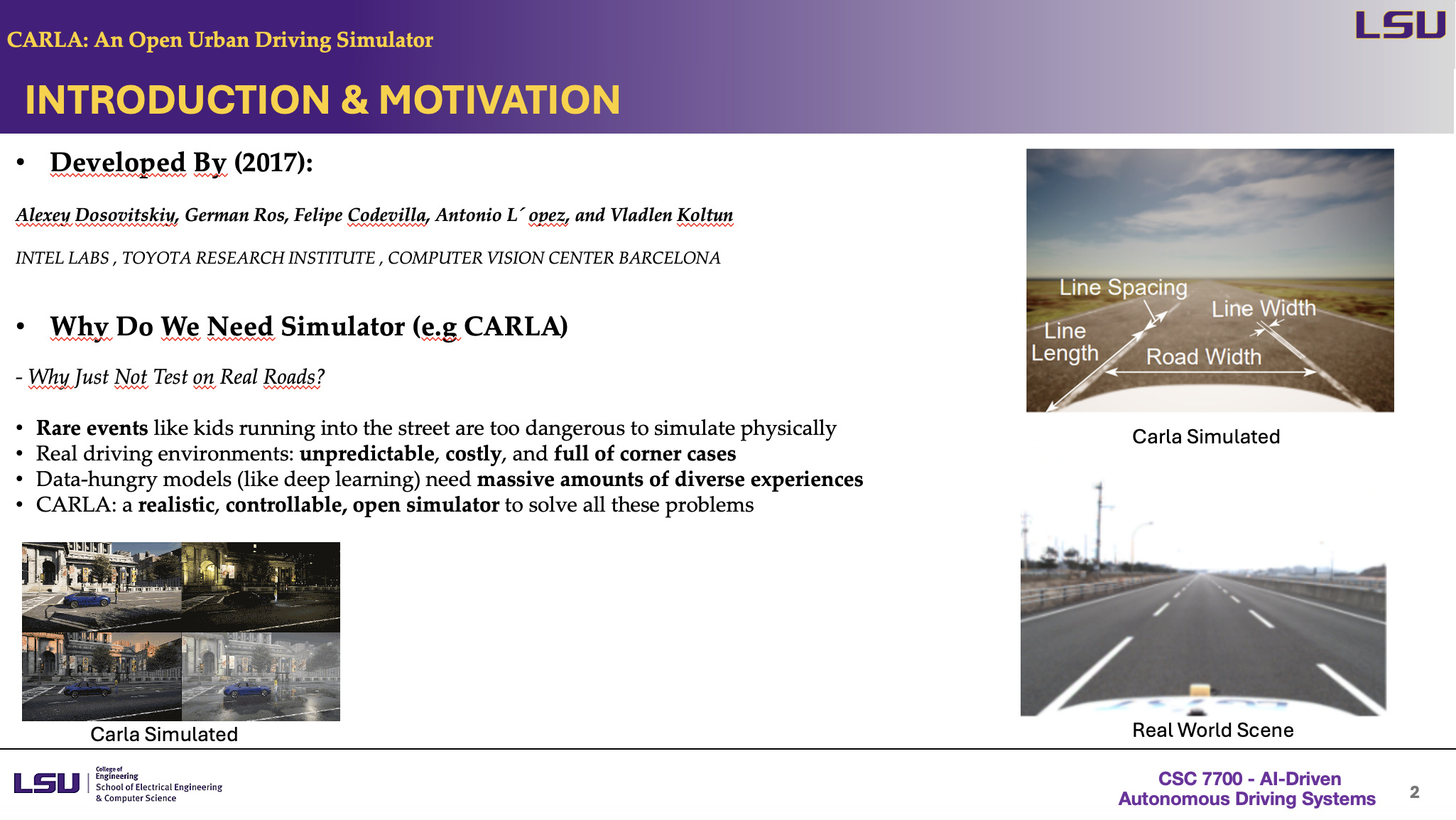

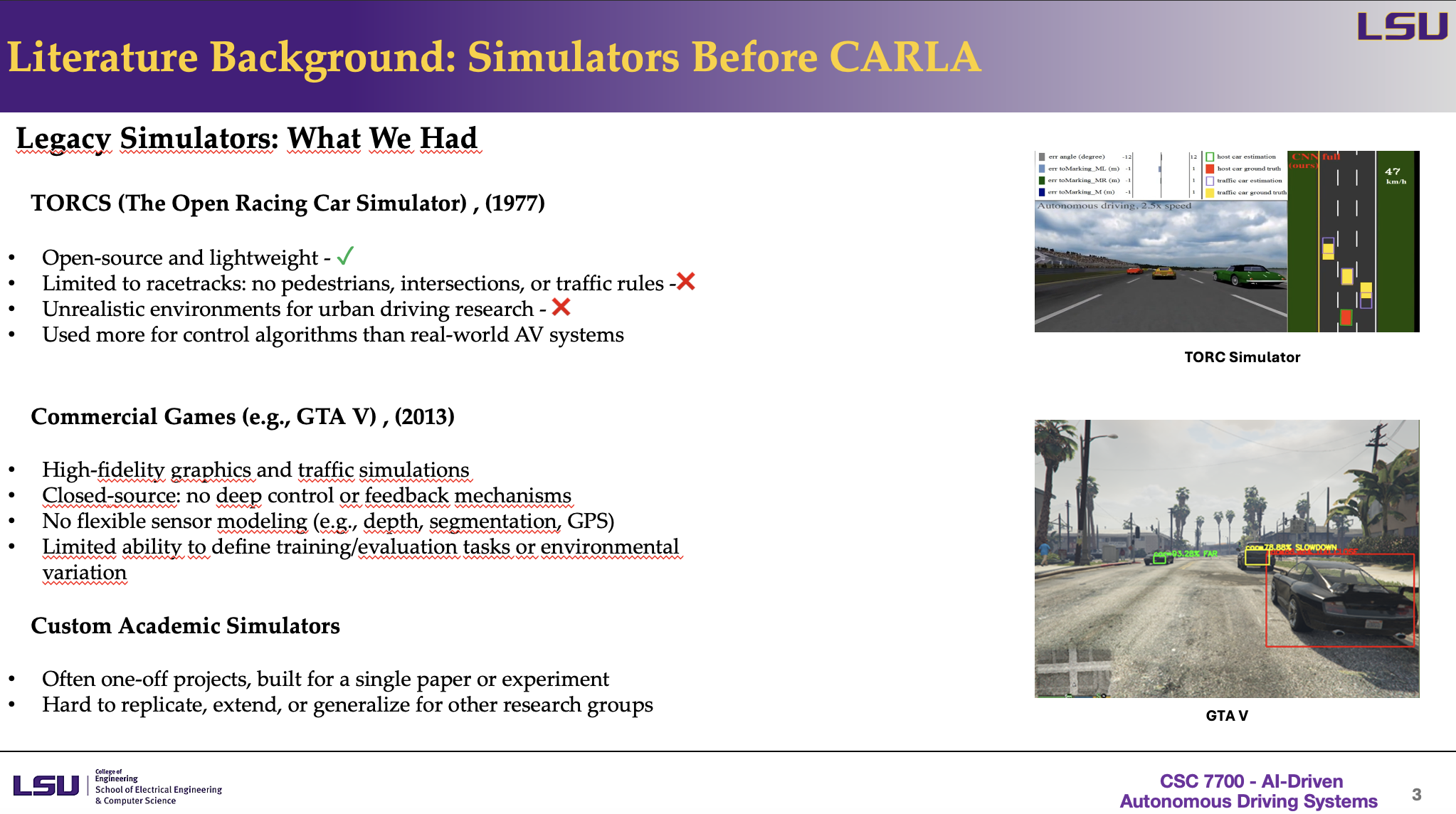

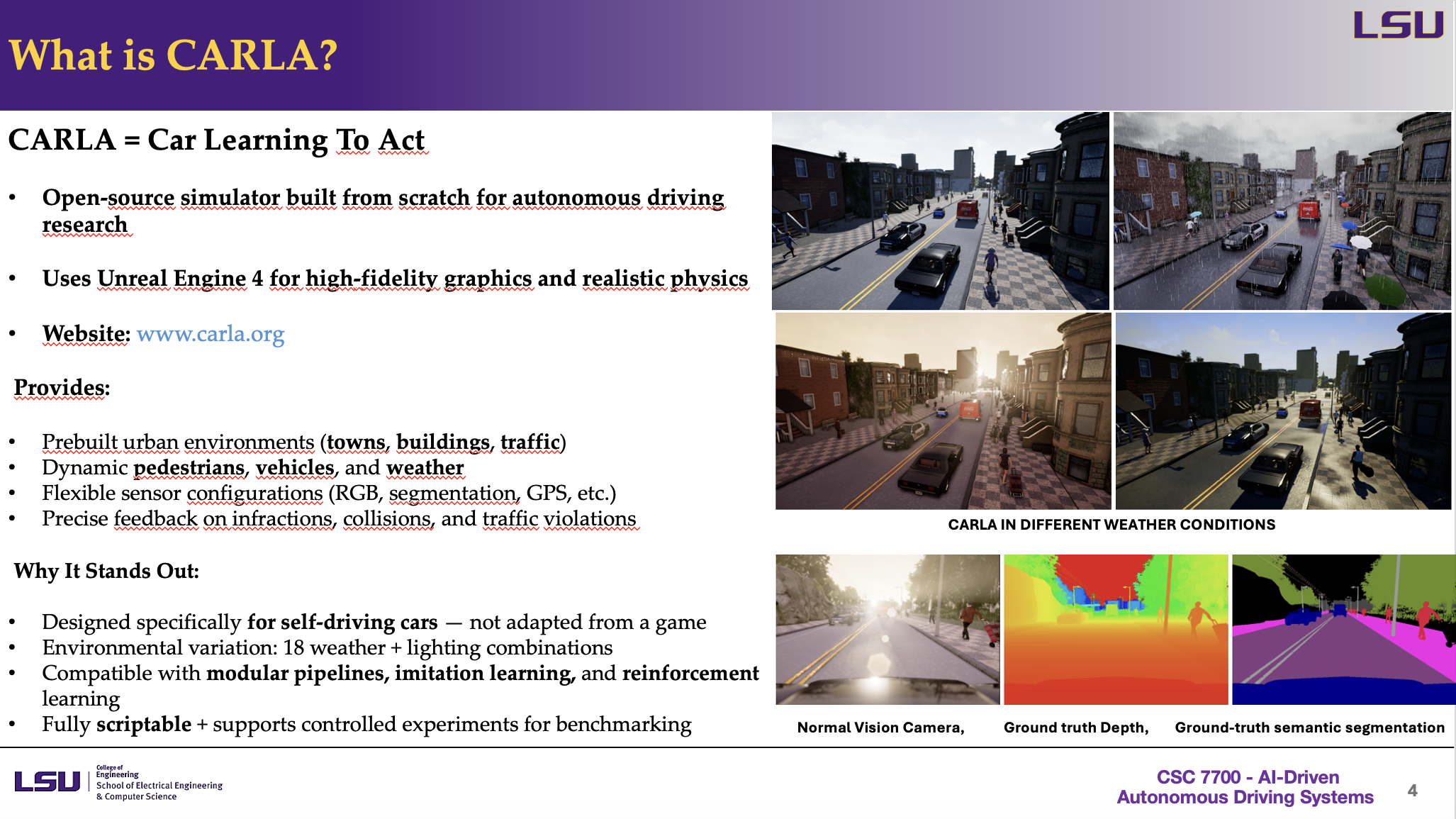

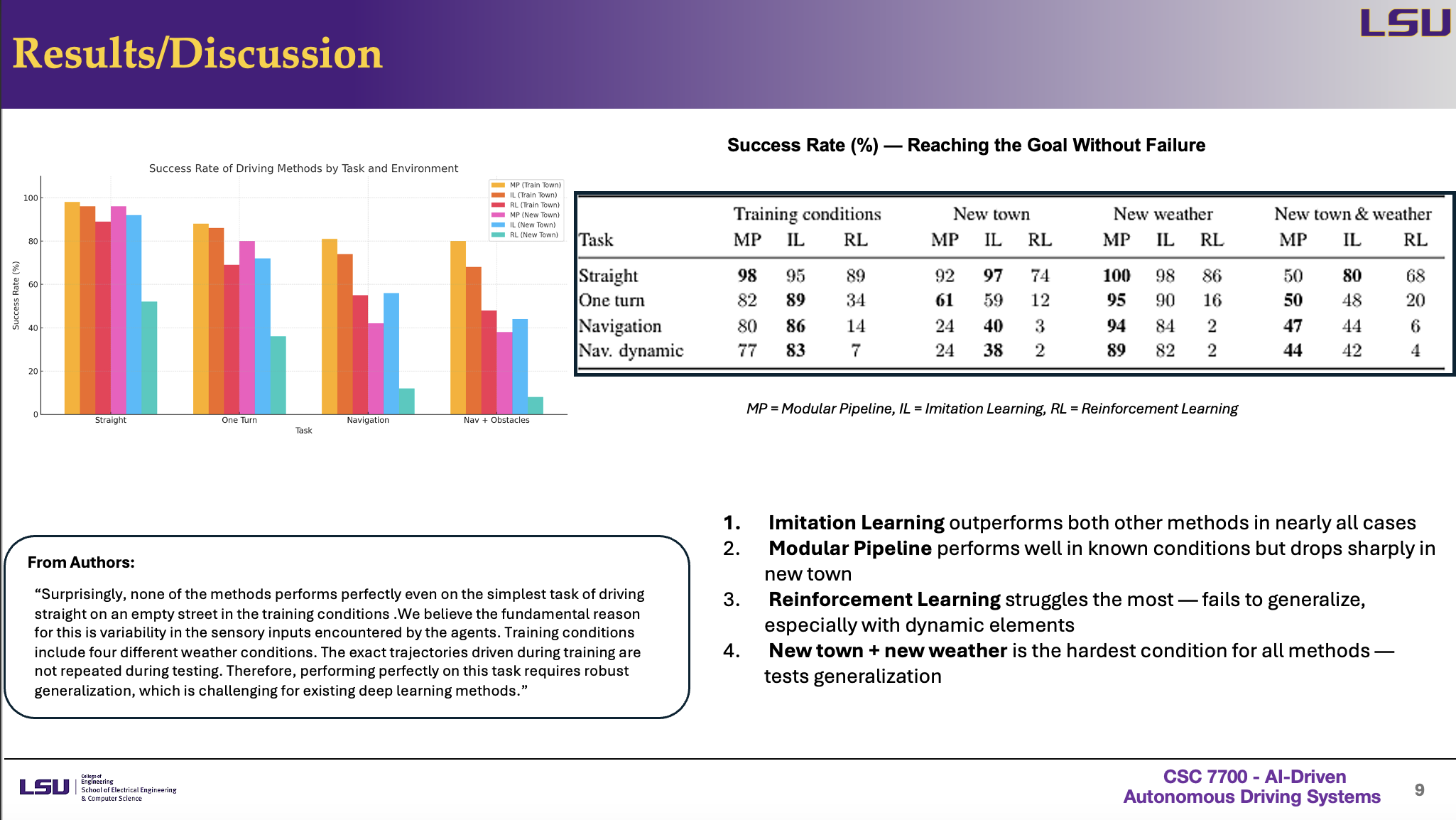

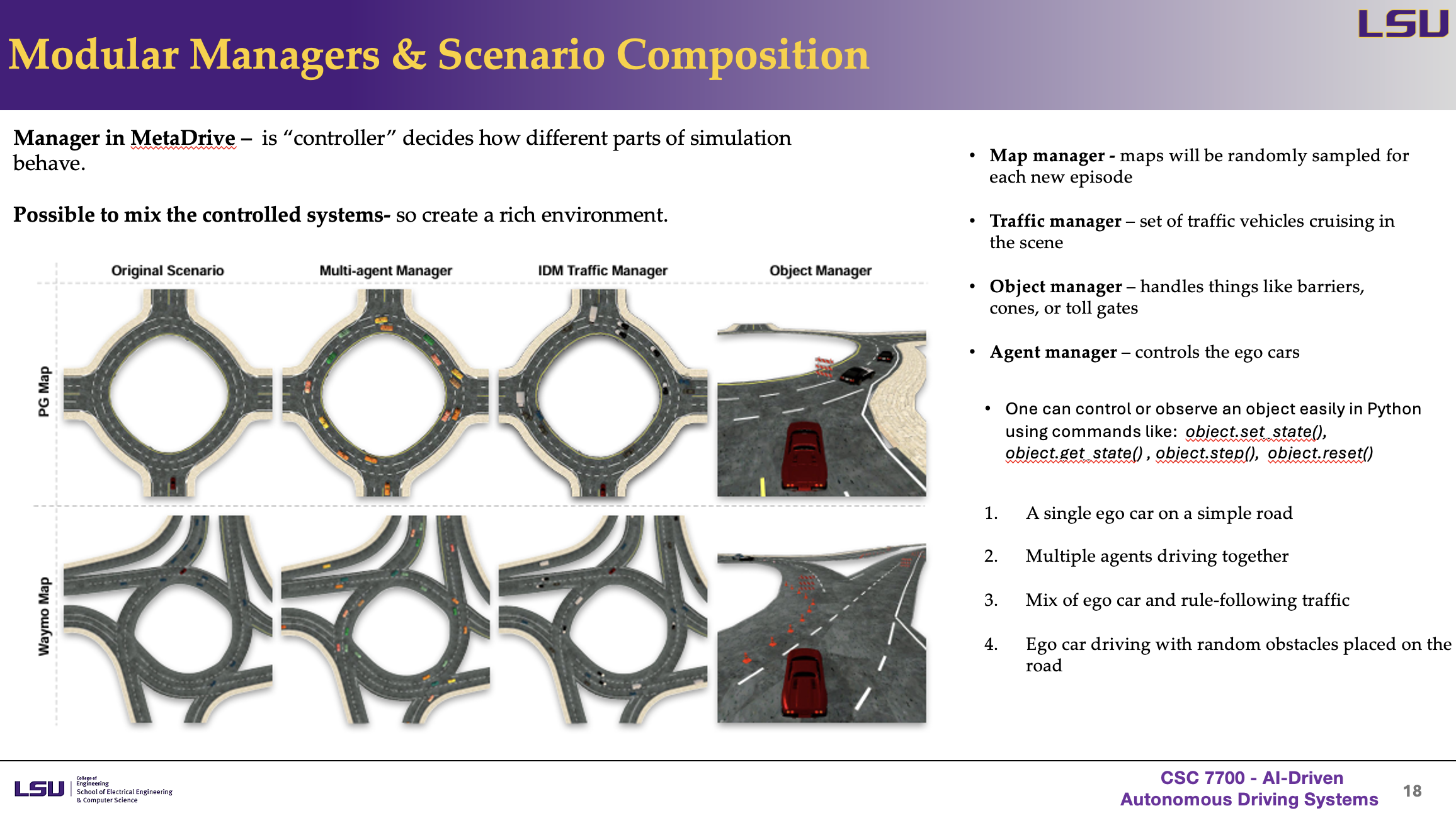

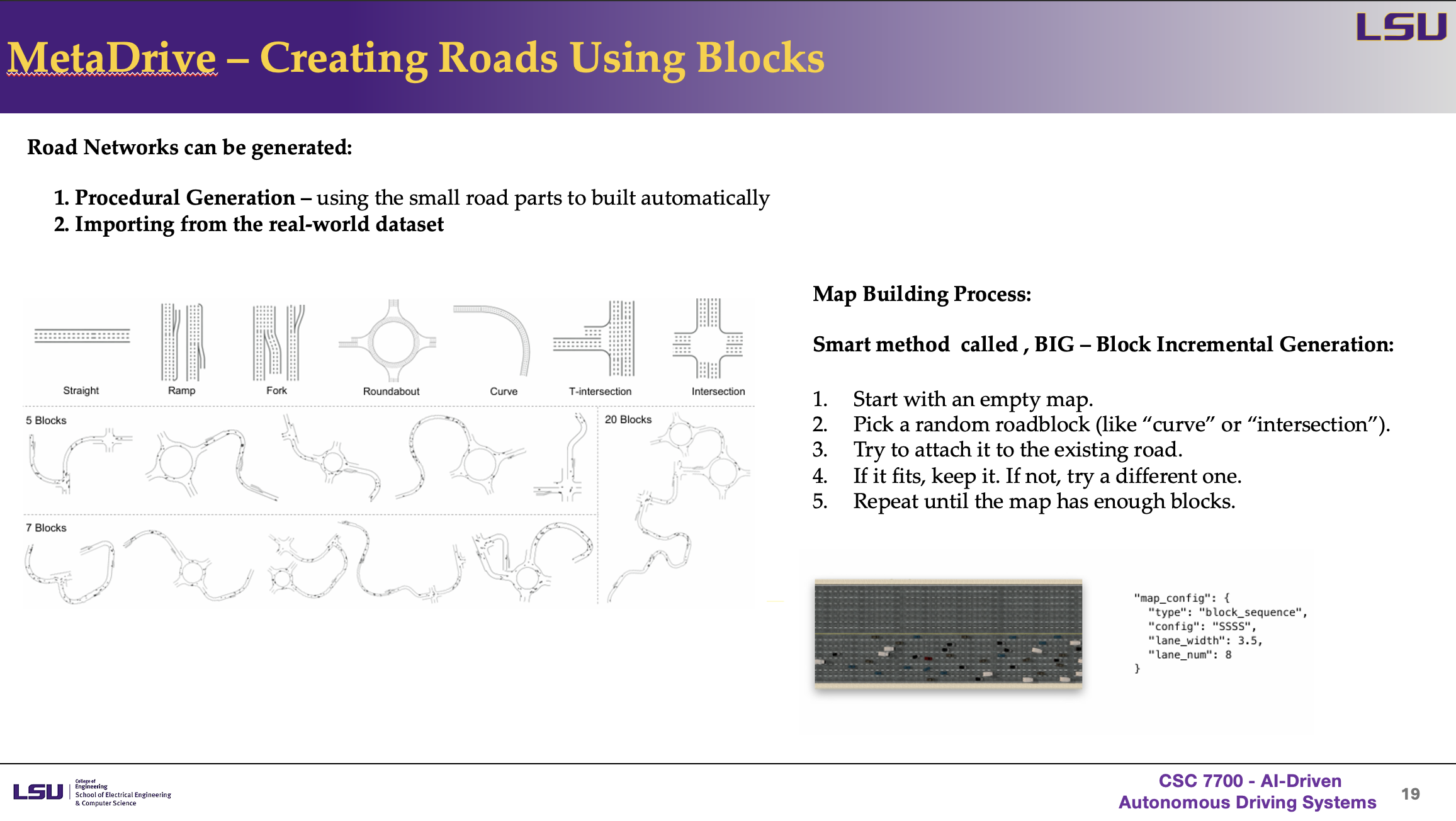

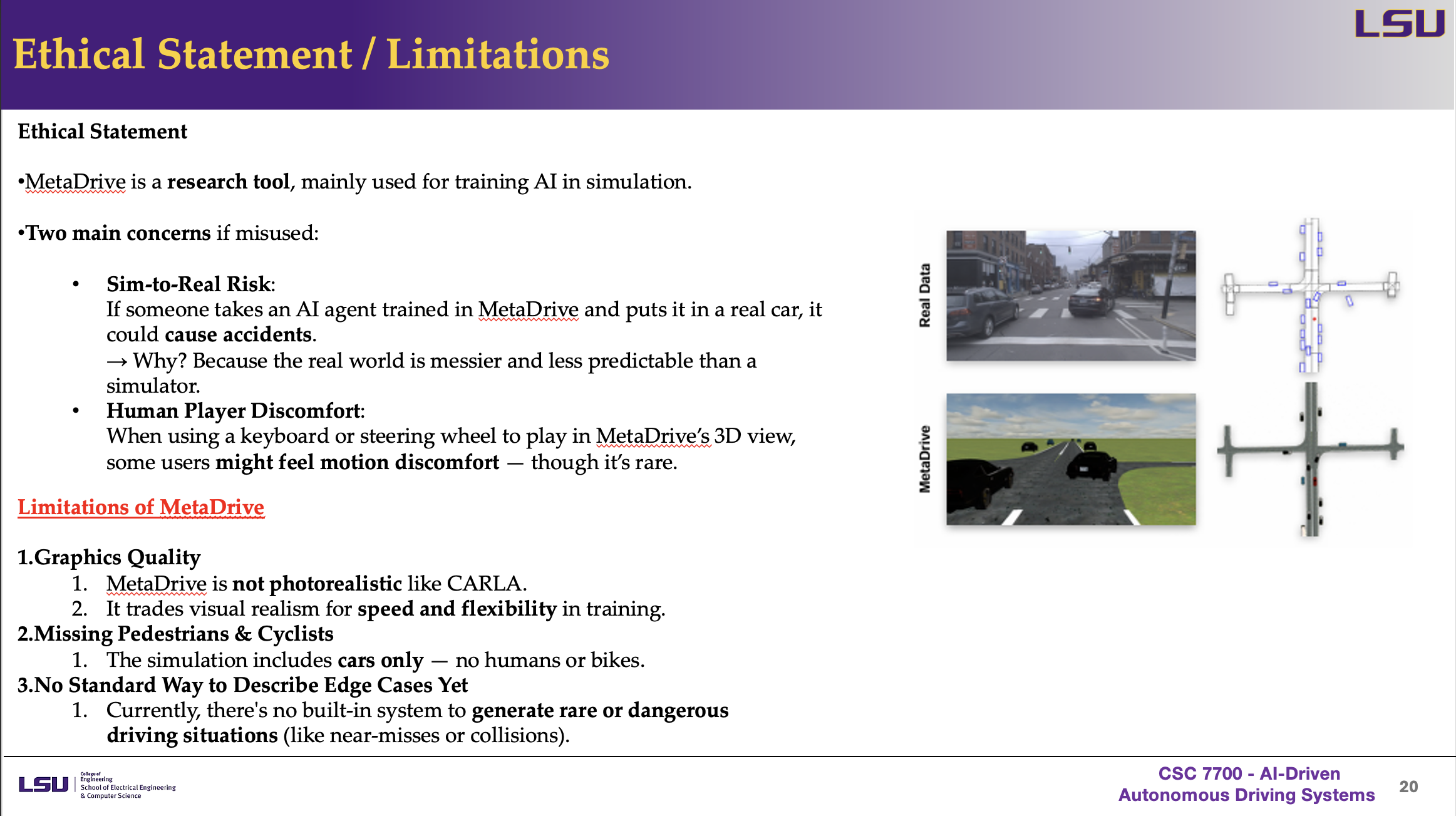

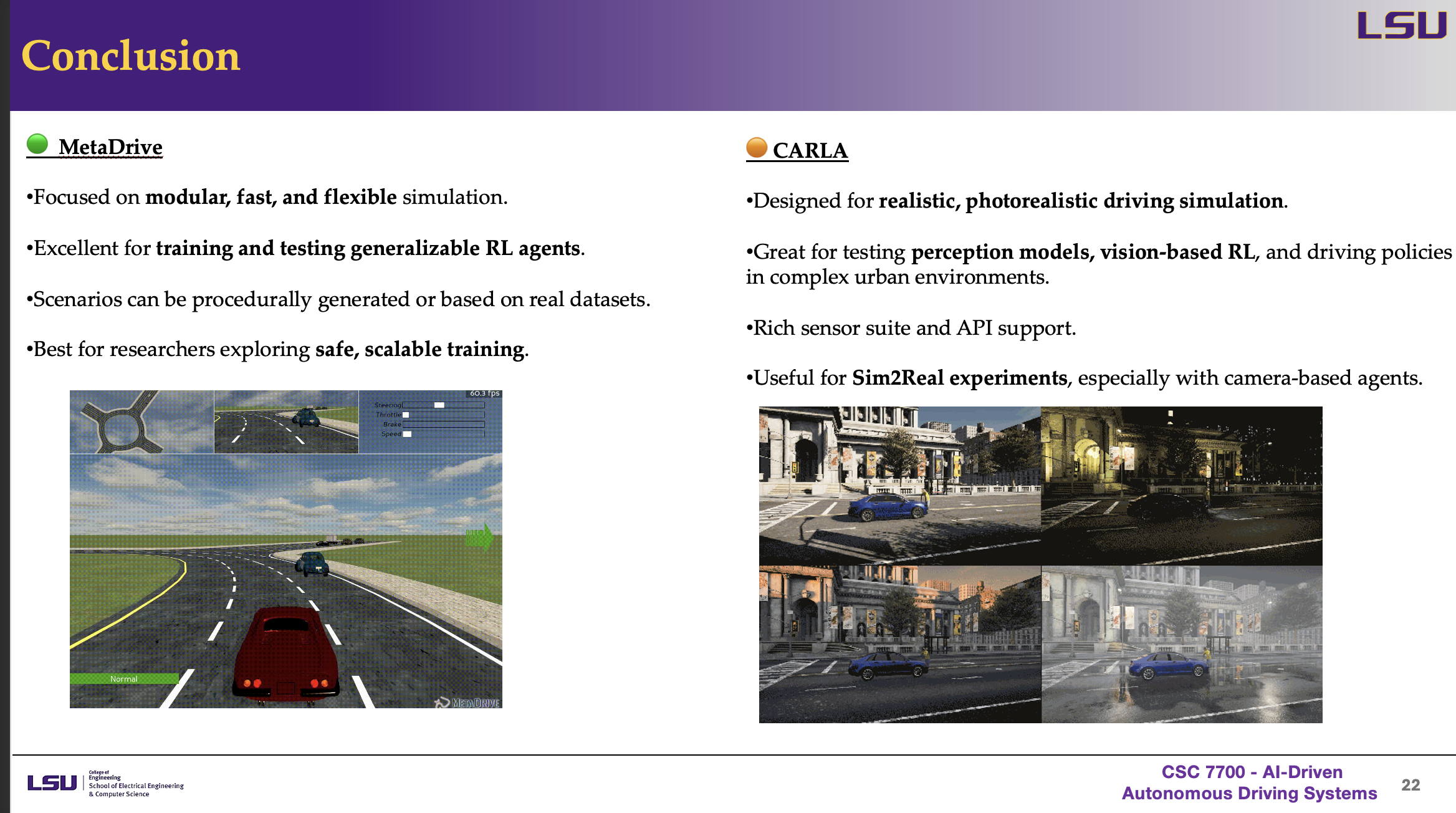

This presentation compares two autonomous driving simulators: CARLA and MetaDrive. CARLA focuses on high realism using rich visuals, sensor data, and complex traffic environments to support perception-based and imitation learning approaches. In contrast, MetaDrive emphasizes fast, scalable, and modular simulation for training reinforcement learning agents in diverse and procedurally generated scenarios. Together, they highlight the trade-offs between visual realism and training efficiency in developing generalizable self-driving systems.

Presentation Breakdown

Discussion and Class Insights

Q1: You’re developing an AI system that autonomously drives ambulances through congested urban areas. The system must learn how to:

- Handle unpredictable traffic
- Make fast but safe decisions
- React to edge cases like roadblocks or aggressive drivers

Which simulator (CARLA or MetaDrive) would you choose to prototype and test this system, and why? What would be your testing priorities: realism, response time, or decision diversity?

Aleksandar: For the first case, I would choose CARLA. It lets me simulate different corner cases and hidden dangers using scripts. MetaDrive doesn’t support this kind of detailed scenario control, so it wouldn’t be as useful here.

George: I agree CARLA is a better choice for the first question because it has more visual detail and can simulate things like pedestrians more realistically.

Obiora: While listening to the presentation, I kept wondering how this could be used in construction. I didn’t even know that CARLA has a construction environment; that was new to me. I’ve actually been searching for simulation tools that are already set up for construction use.

Q2: MetaDrive doesn't include pedestrians or cyclists. CARLA has limited human-like behavior. If your agent is trained in a world where pedestrians don't exist, is it “fair” to expect it to handle them safely in the real world? Why? How can we include the effects of pedestrians in traffic?

Aleksandar: For the second question, I don’t think it’s fair to expect an agent trained without obstacles like pedestrians to perform safely in the real world. The real world is very different: if the agent has never seen those challenges during training, it won’t know how to react properly.

George: For the second question, if the training environment doesn’t include pedestrians, then the model won't know how to detect or react to them properly. That’s why it’s important to combine simulation data with real-world data, especially for rare or risky situations. This helps create a more balanced and complete training dataset. Ideally, the model should be trained on scenarios that include pedestrians so it can learn to handle them safely.
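George's suggestion of blending simulated and real-world data can be illustrated with a small weighted sampler. This is only a sketch and was not shown in the presentation: the function name `build_mixed_batch`, the `real_fraction` parameter, and the toy records are all hypothetical, and a real pipeline would draw from actual simulator rollouts and recorded driving logs.

```python
import random


def build_mixed_batch(sim_samples, real_samples, batch_size,
                      real_fraction=0.3, seed=0):
    """Draw a training batch that mixes simulated and real-world samples.

    real_fraction is an assumed knob: the share of the batch taken from
    real-world data (for example, rare pedestrian encounters that a
    simulator does not model).
    """
    if not 0.0 <= real_fraction <= 1.0:
        raise ValueError("real_fraction must be between 0 and 1")
    rng = random.Random(seed)
    n_real = round(batch_size * real_fraction)
    n_sim = batch_size - n_real
    # Sample with replacement so a small real-world set can be oversampled.
    batch = rng.choices(real_samples, k=n_real) + rng.choices(sim_samples, k=n_sim)
    rng.shuffle(batch)
    return batch


# Toy records standing in for real data; purely illustrative.
sim = [{"source": "sim", "id": i} for i in range(100)]
real = [{"source": "real", "id": i} for i in range(5)]
batch = build_mixed_batch(sim, real, batch_size=10, real_fraction=0.3)
```

Sampling with replacement lets a handful of real-world pedestrian cases appear in every batch even when simulated data vastly outnumbers them, which is one simple way to give rare or risky situations more weight during training.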

Audience Questions and Answers

No questions were asked during the presentation.