Deep Reinforcement Learning for Autonomous Driving: A Survey

Paper written by B. Ravi Kiran, Ibrahim Sobh, Victor Talpaert, Patrick Mannion, Ahmad A. Al Sallab, and Senthil Yogamani

Presented by Sujan Gyawali

Blog post by George Hendrick

Presented on April 10, 2025

Paper LinkBrief Summary:

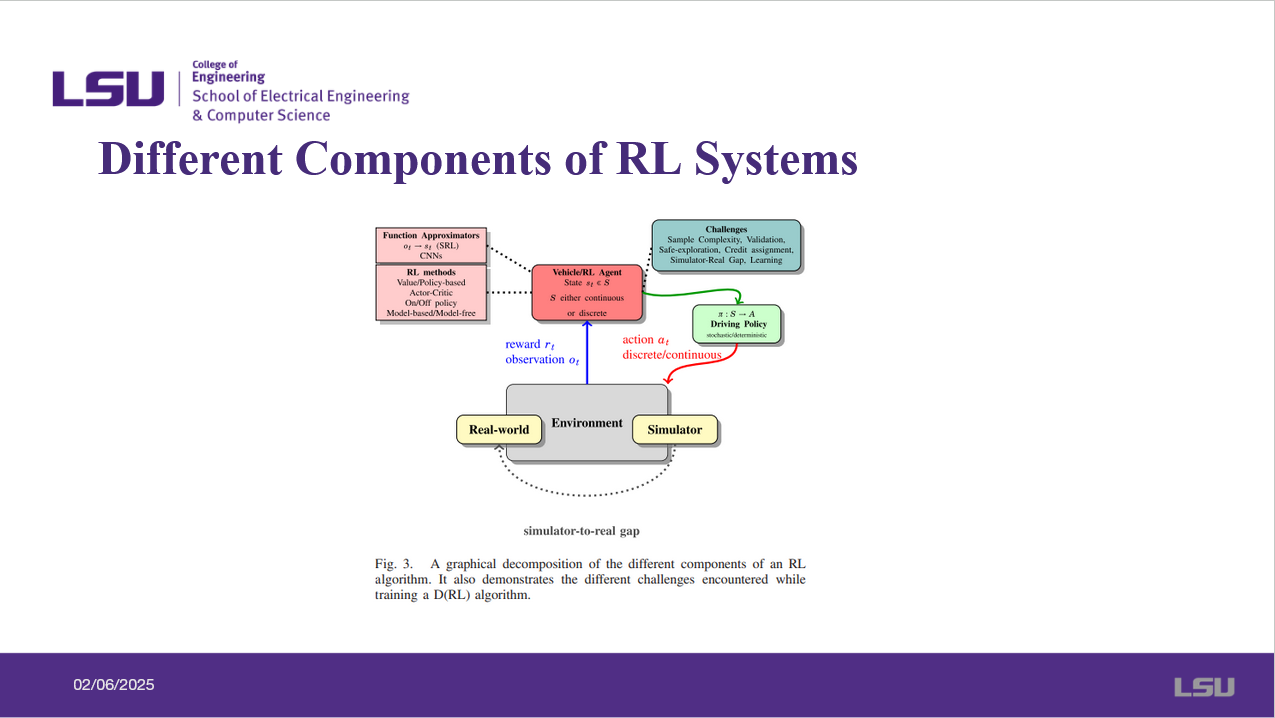

Sujan Gyawali presents the paper Deep Reinforcement Learning for Autonomous Driving: A Survey. The paper summarizes deep reinforcement learning algorithms and provides a taxonomy of automated driving tasks where these methods have been employed. The paper discusses the role of simulators in training agents, as well as methods to validate, test, and robustify existing solutions in RL.

Slides:

Reinforcement learning involves an agent learning by doing actions and receiving feedback. Deep reinforcement learning uses deep neural networks to assist the agent in understanding complex environments.

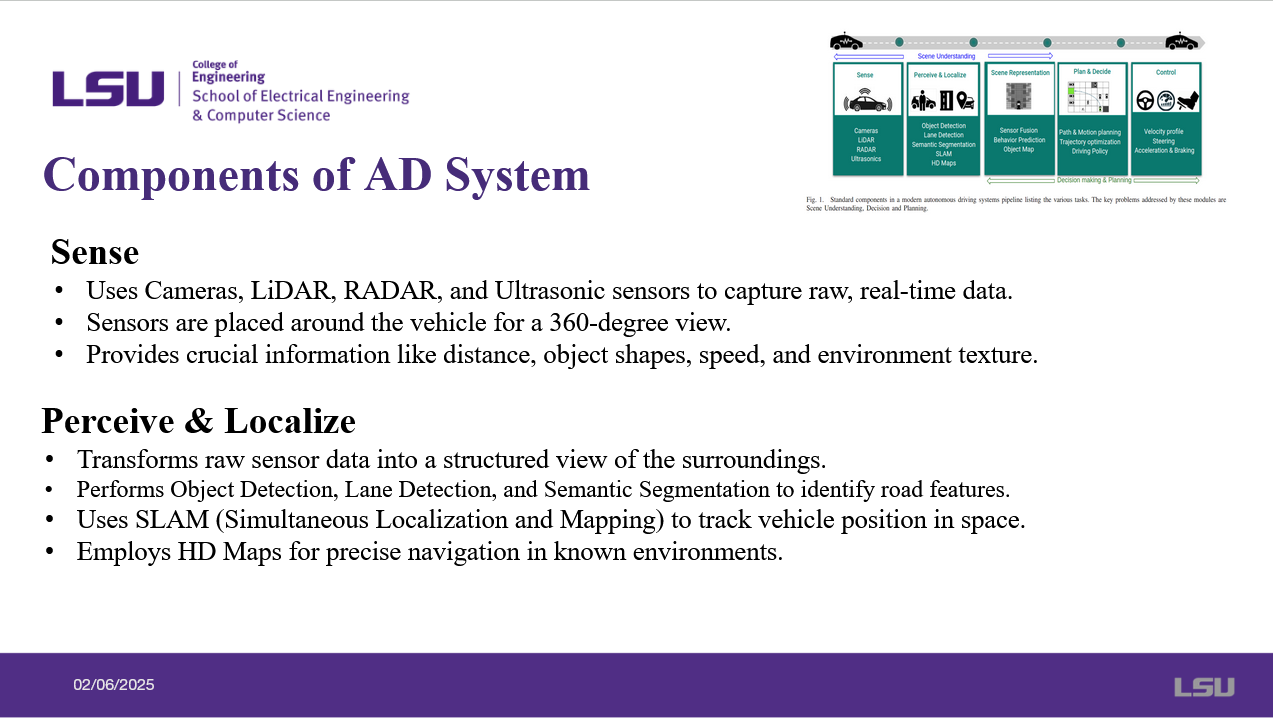

Autonomous driving systems involve components such as cameras, LiDAR, RADAR, and Ultrasonic sensors which are used to capture raw, real time data. Sensors are placed in a configuration that allows a 360 degree view, and these sensors provide critical information. The data from the sensors is then transformed into a structured view of the surroundings.

Sensor data is fused into a unified object map such as a bird's eye view. Behavior prediction can then be performed on dynamic objects such as cars or pedestrians. It handles sensor noise and uncertainty using probabilistic fusion techniques. Path and motion planning can then be determined such that safe and feasible routes can be created. Trajectory optimization is used to ensure smooth efficient driving and a driving policy is determined.

Introduces value based methods such as Q-Learning, which learns the Q-function and follows the best possible policy, and Deep Q-Networks, which combines Q-Learning with Deep Neural Networks to handle complex, high-dimensional input spaces.

Policy based methods involve learning the policy directly, instead of learning value functions like Q-values. The policy tells the agent what to take in each state. The policy is usually represented by a neural network and policy gradients are used to improve the policy step by step. Actor-critic methods is a combination of policy-based and value-based methods. The actor determines what action to take while the critic evaluates how much value the action had.

In model-based, the agent learns how the environment works and builds a model for how actions lead to rewards. Actions are planned and simulated before they are actually attempted, which helps reduce real-world interactions. In model-free, the agent instead lears from trial and error by interacting with the environment and collecting rewards.

On-policy methods involve the agent learning from their current behavior, improving the same policy it's using to act. Off-policy involes the agent learning from past behavior or from another policy.

In basic RL, values are stored for each state-action pair in table, which can cause issues in real-world problems when the number of states and actions is too large. DRL solves this using deep neural networks to learn from complex inputs, which helps handle large or continuous environments.

Extensions to RL include reward shaping, a technique which guides learning by adding extra rewards, and multiple-agent reinforcement learning, which involves multiple RL agents learning and acting in the same environment.

Multiple-object reinforcement learning handles situations where an agent must balance multiple goals at the same time, while learning from demonstrations allows an agent to learn how to act by copying examples from an expert.

State Space, Action Space, and Reward Functions are used to train self-driving cars using deep reinforcement learning. State Space involves what the car knows, Action Space gives information about the car's capabilities, and the Reward Function guides learning. Motion planning and trajectory optimization are applied to further determine a feasible path and consider static and dynamic elements.

Simulators are used with RL to train and test driving agents safely by simulating vehicle dynamics, sensors, environments, and state-action pairs.

Validating RL systems is difficult as different implementations, hyperparameters, and evaluations setups can lead to inconsistent results. It's difficult to know if a policy is truly effective or just overfitting to a specific setup. Simulators are used to improve safety as well as offering cheap, safe, and labeled data for training RL models.

RL agents need a huge number of trials to learn good policies, and reward shaping helps the agent learn faster by giving more frequent feedback. Intrinsic reward functions can help guide learning based on curiosity and prediction errors. The agent rewards itself for exploring new or surprising situations.

Imitation learning uses expert demonstrations to train an agent, but the expert may not visit all possible states the agent might face. DAgger helps by letting the agent act, then asking the expert to label those new states so the training set includes both expert and agent experiences. Likewise, MARL helps assist vehicles coordinate in complex situations.

Agents have to learn safety, especially when handling unpredictable traffic or pedestrians. Safe DAgger adds a safety policy to predict mistakes of the main policy by avoiding unsafe actions without constantly relying on expert feedback.

RL is still a growing field in autonomous driving and is limited to public datasets and practical guidance. This paper organizes and reviews key RL methods and challenges in driving tasks. There are many challenges such as sample efficiency, safety, and bridging the gap between simulation and reality.

Sujan believes combining trial-and-error learning with deep neural networks can help self-driving cars learn complex tasks. The paper had comprehensive coverage as well as a real world focus, however the paper had limited experimental results as well as heavy on technical language.

Later work involved a survey which focused on the latest advancements in behavior planning using reinforcement learning and another review on safe reinforcement learning for autonomous driving.

Q&A:

Question: Aleksander asked what is the difference between an agent and a model in this context?

Answer: The presenter responded that the agent is the entity that interacts with the environment, observes it, and performs actions to maximize its reward. The model is the representation of the environment the agent uses for learning and planning.

Discussion:

Question 1: In a future city full of driverless cars, how do you think they'll "talk" to each other? Could multi-agent RL by the key to traffic harmony or chaos?

Aleksandar: Asserted belief of the importance to optimize traffic, and agrees multi-agent RL could be effective.

Professor: Added that there are public concerns about using reinforcement learning. The goal is to reach optimization to minimize cost and improve efficiency and time, as well as reduct cost, but reinforcement learning needs a long time to optimize the process.

Question 2: Would you prefer a car that learns by exploring the world or one that builds a mental model first? Why might that choice matter in real-time traffic.

Aleksandar: Agrees that real world learning could cause dangerous consequences.