DeepDriving: Learning Affordance for Direct Perception in Autonomous Driving

Authors: Chenyi Chen, Ari Seff, Alain Kornhauser, Jianxiong Xiao

Presentation by: Ruslan Akbarzade

Time of Presentation: February 11, 2025

Blog post by: Sujan Gyawali

Link to Paper: Read the Paper

Summary of the Paper

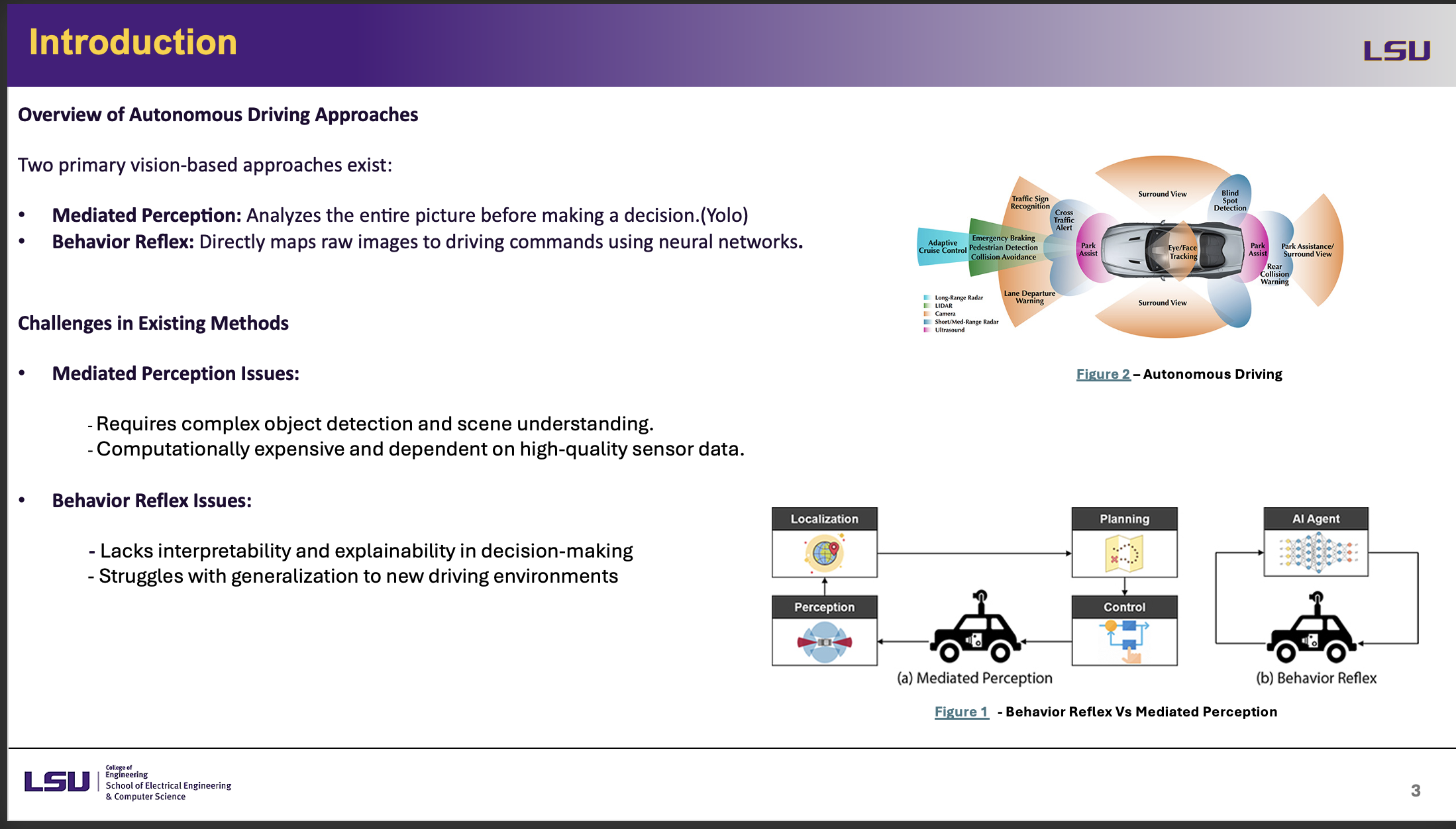

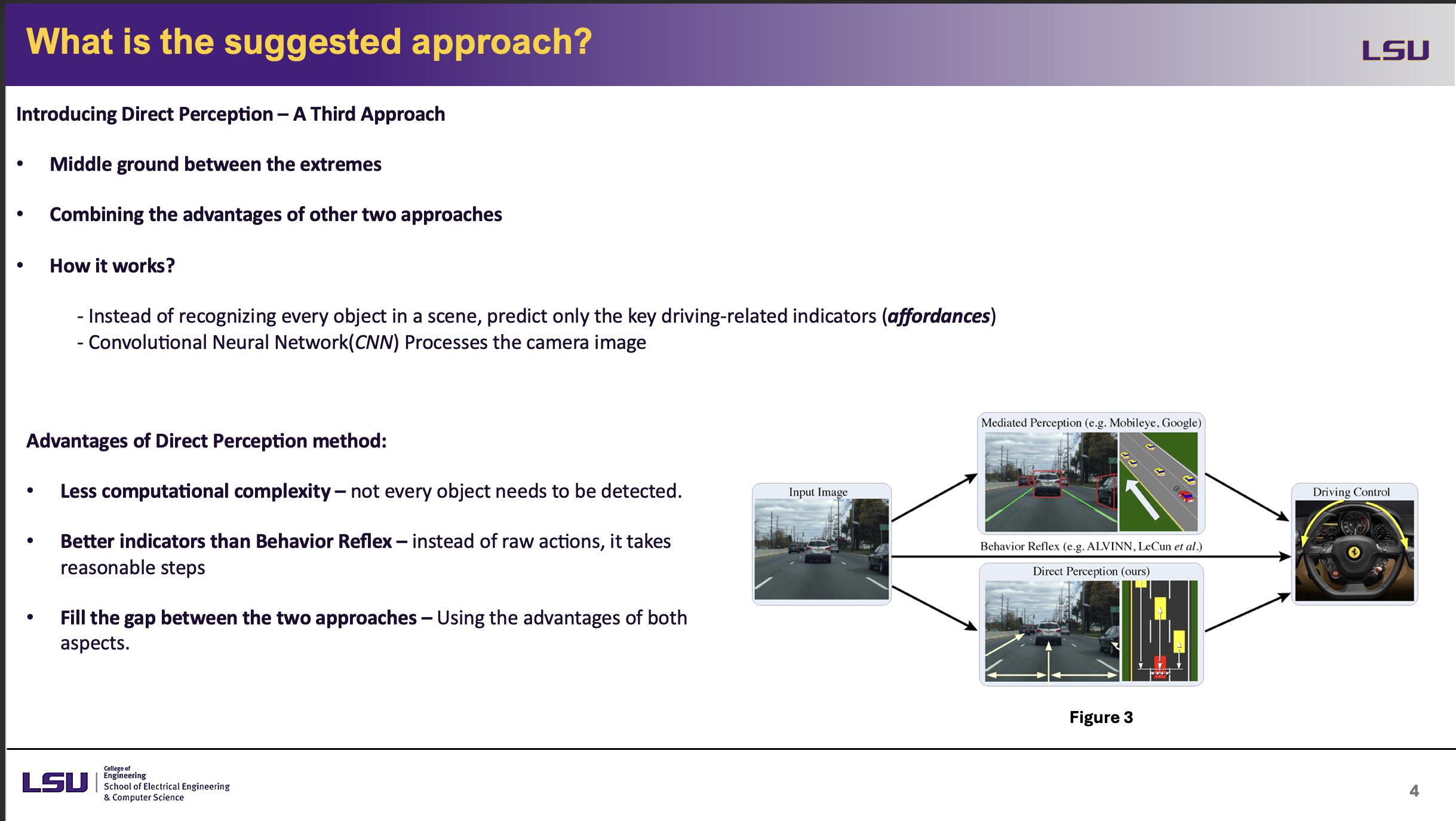

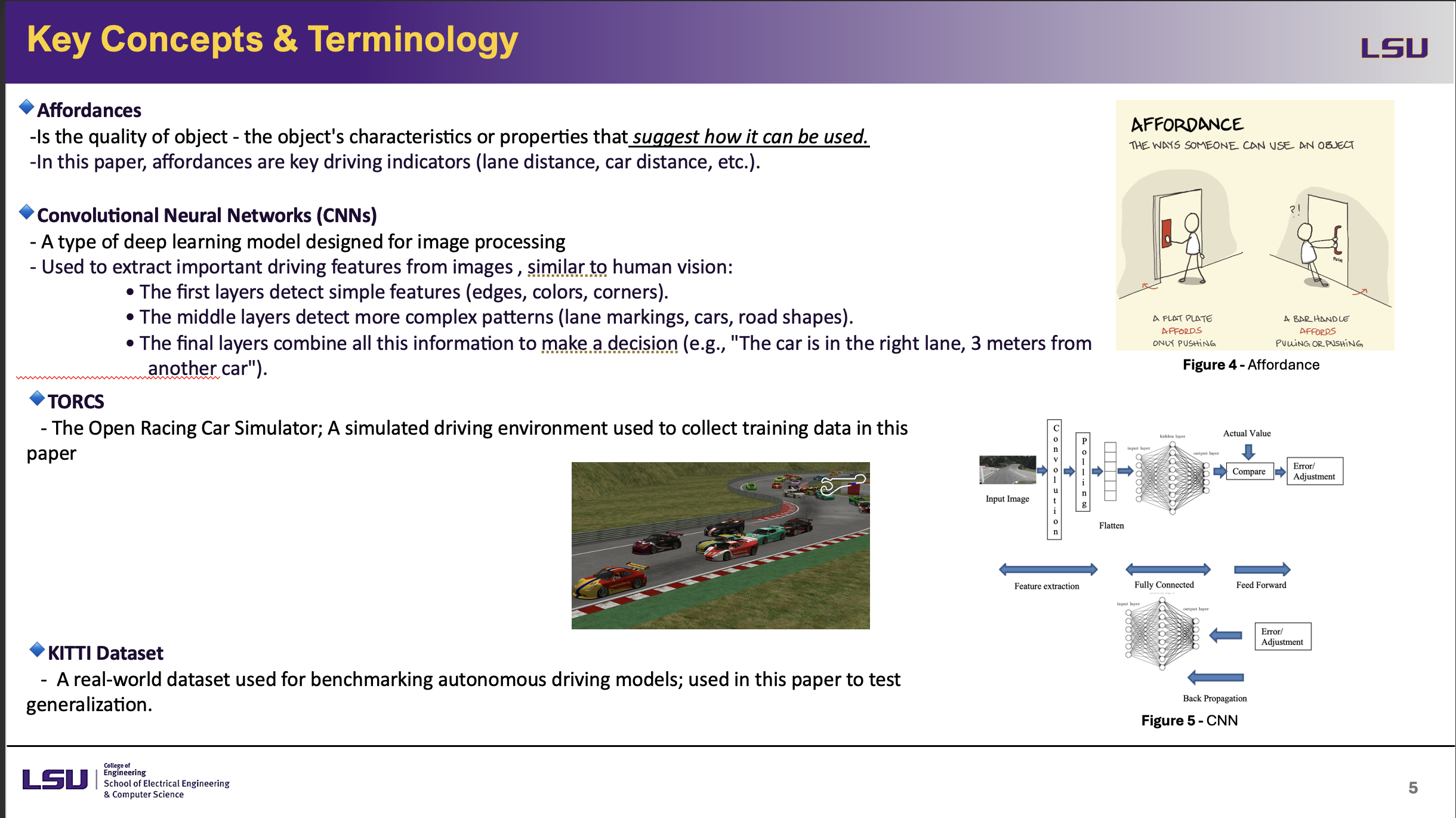

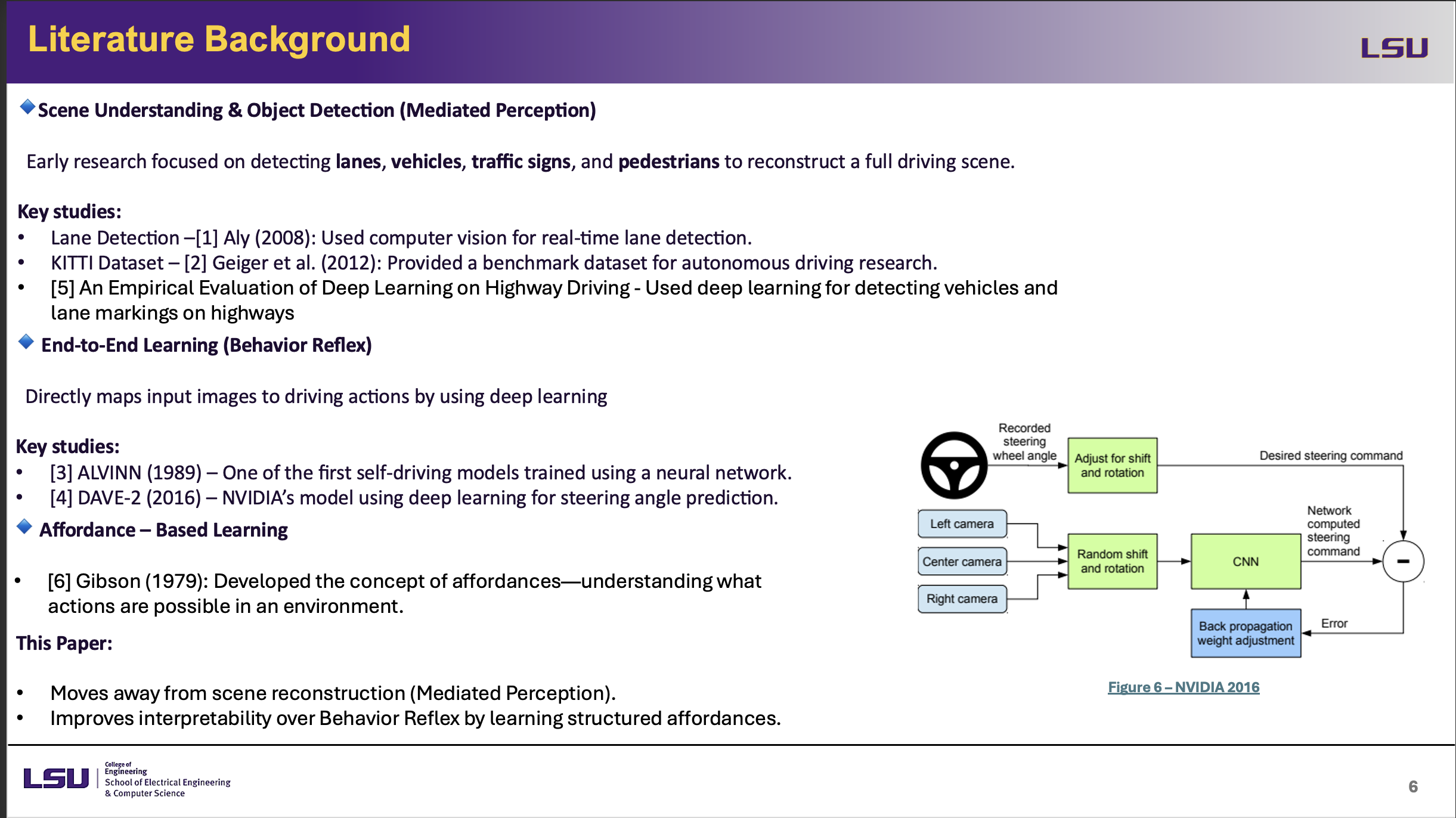

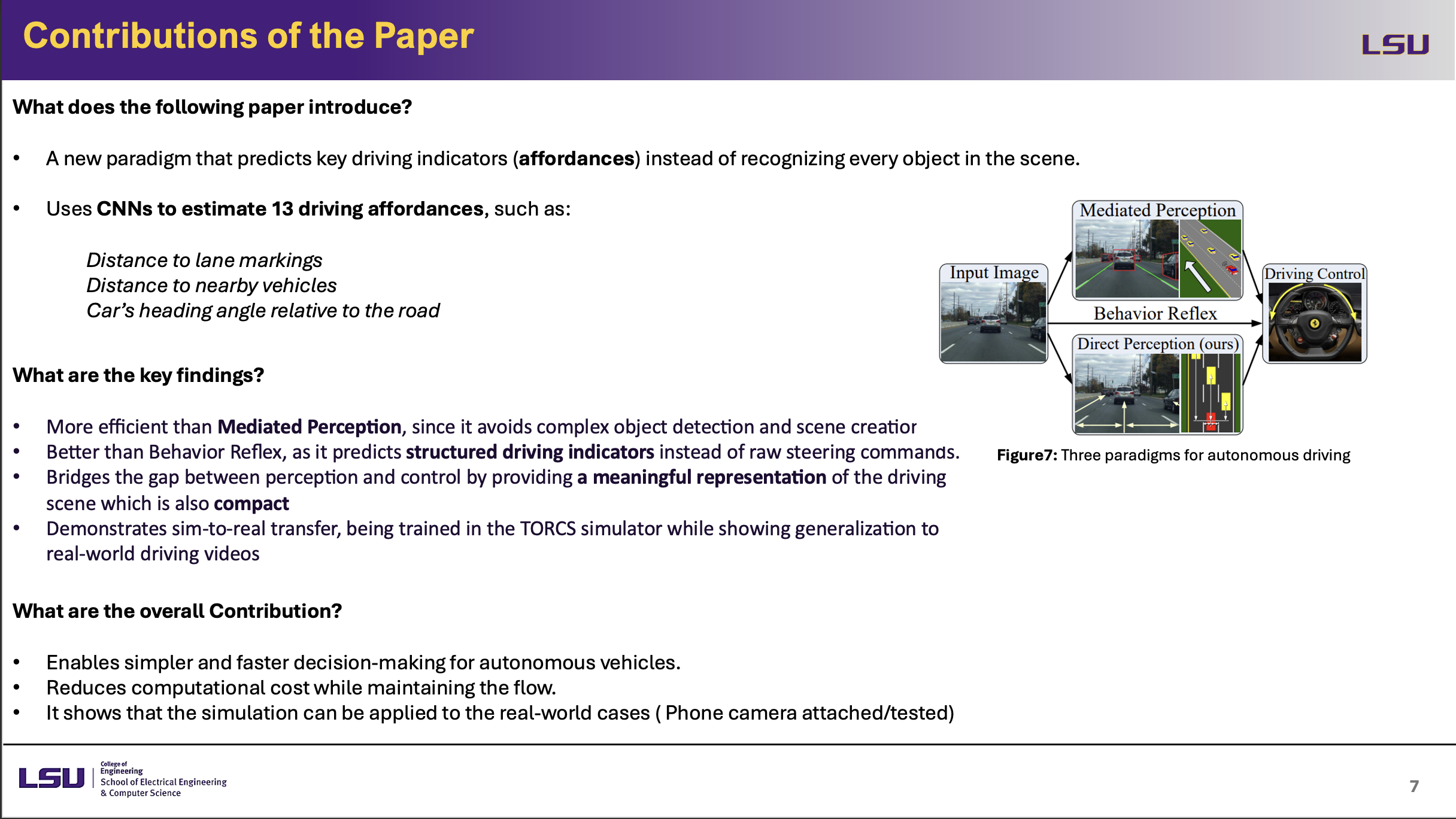

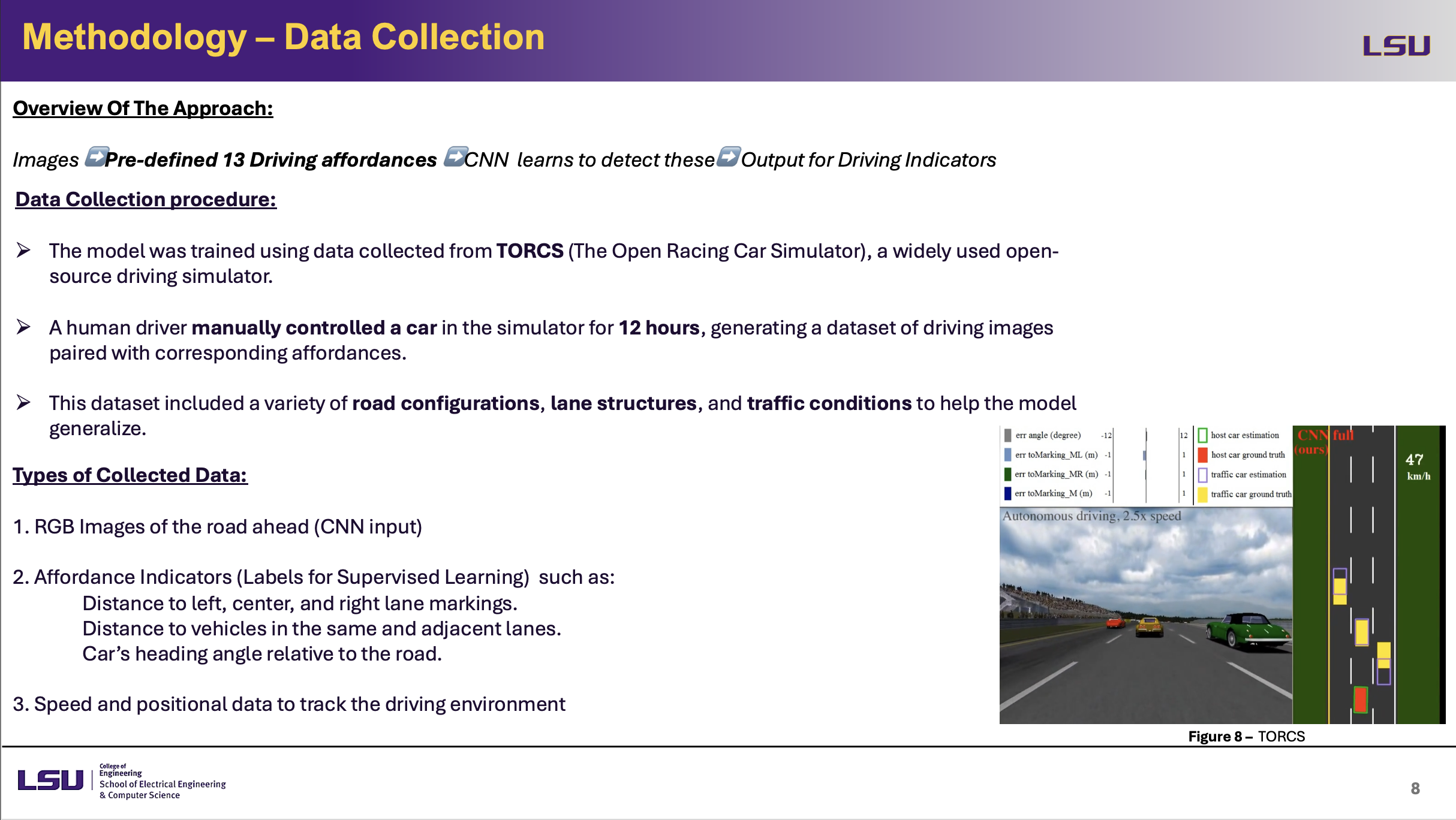

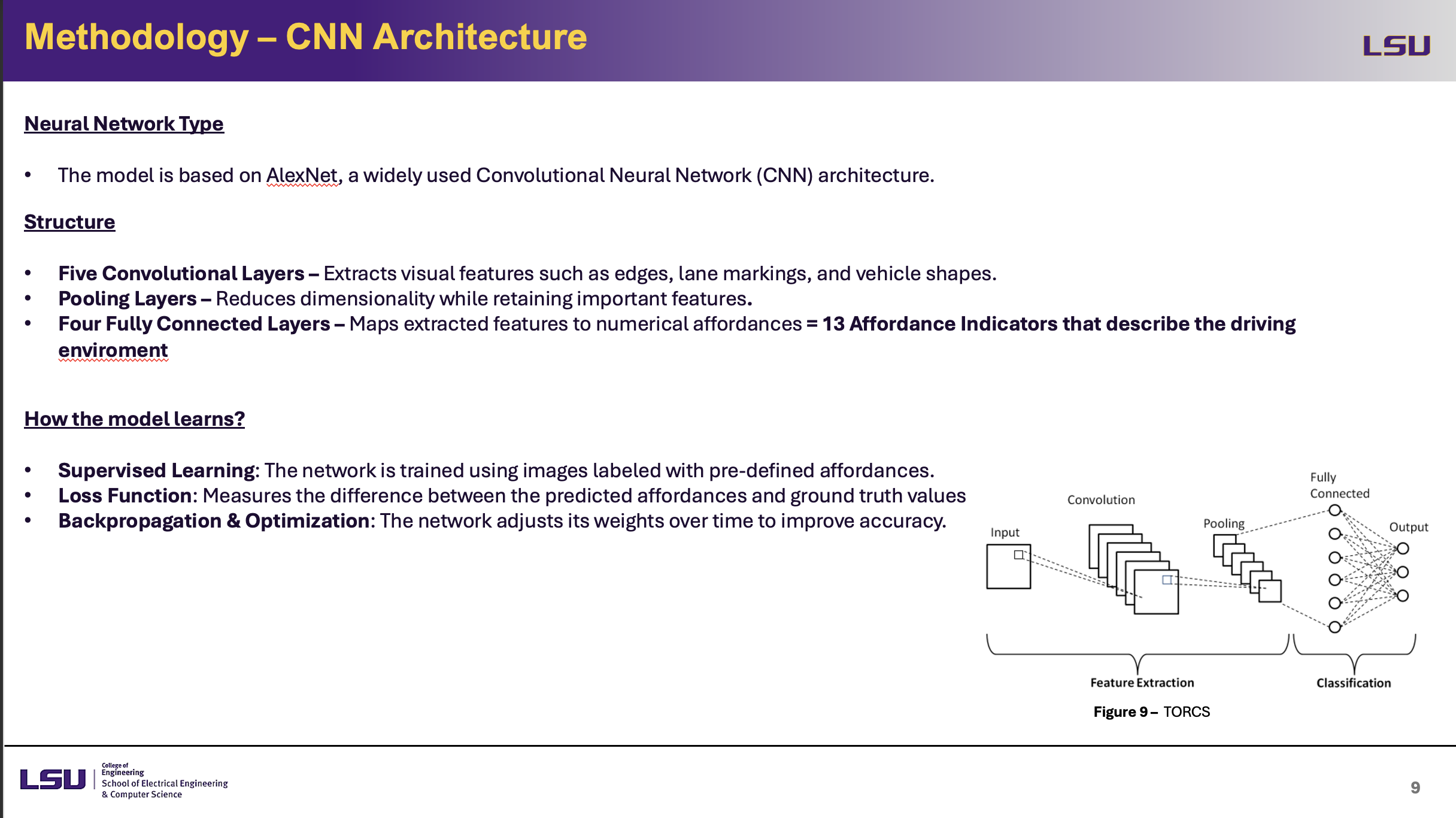

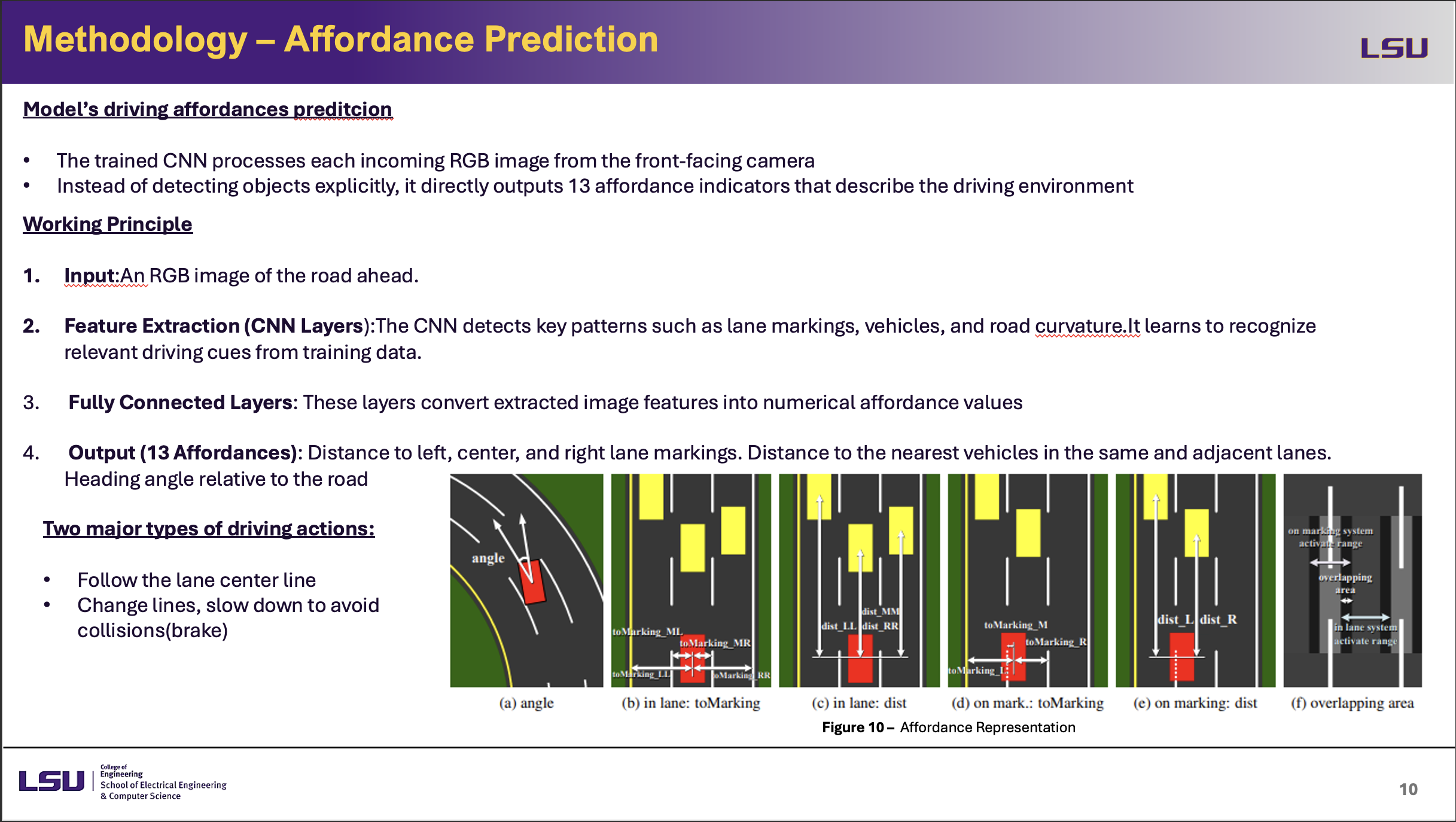

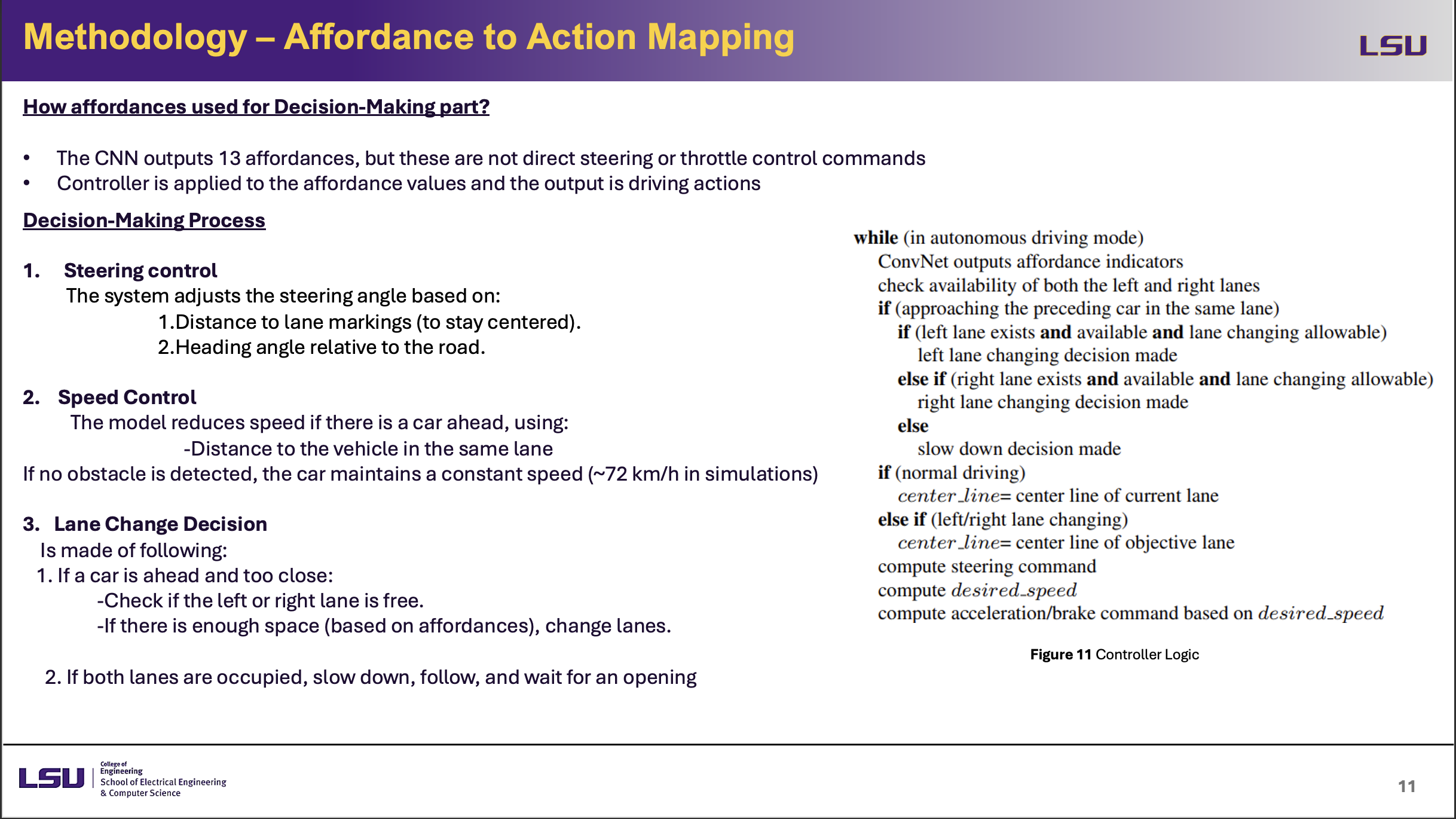

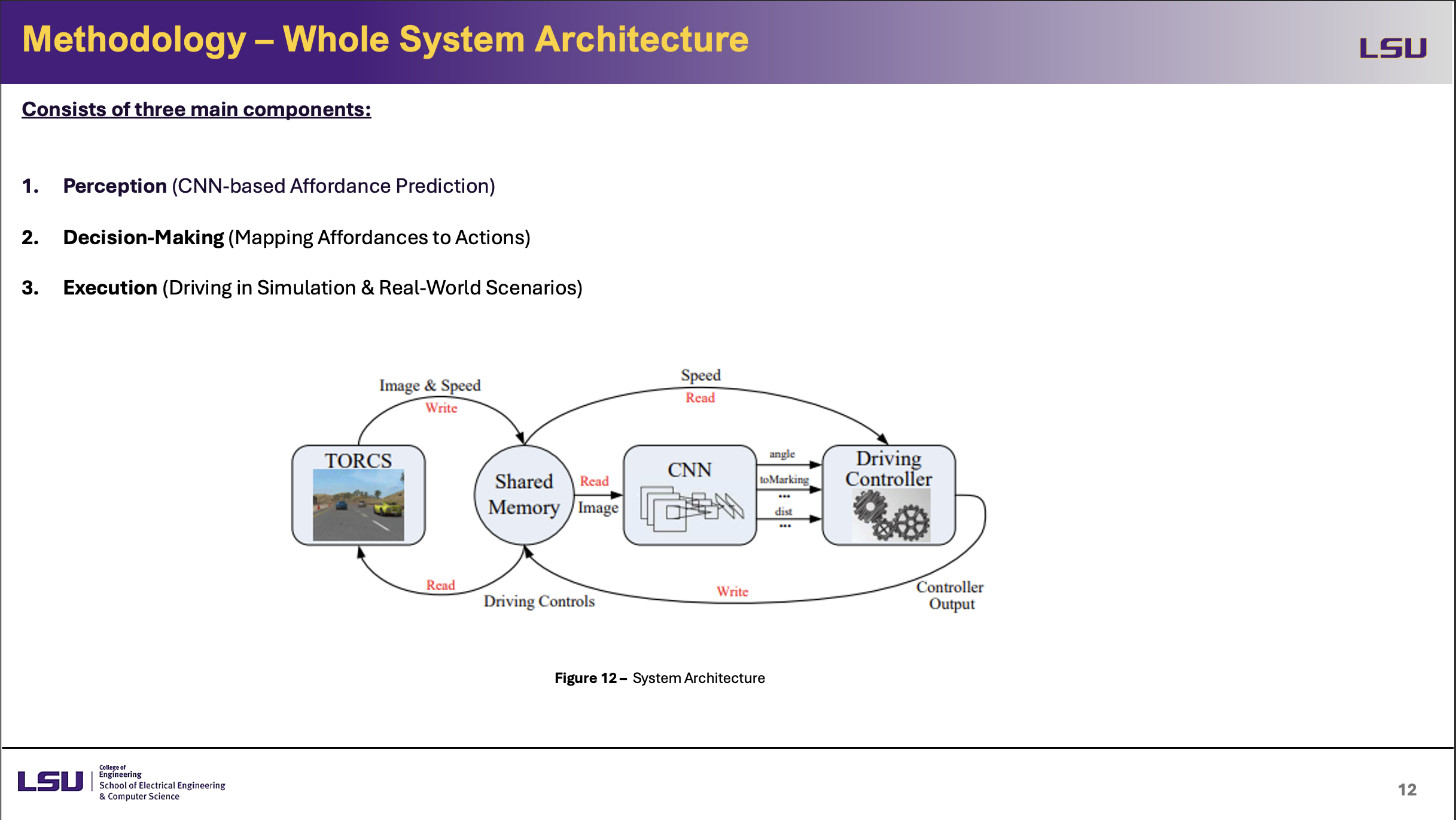

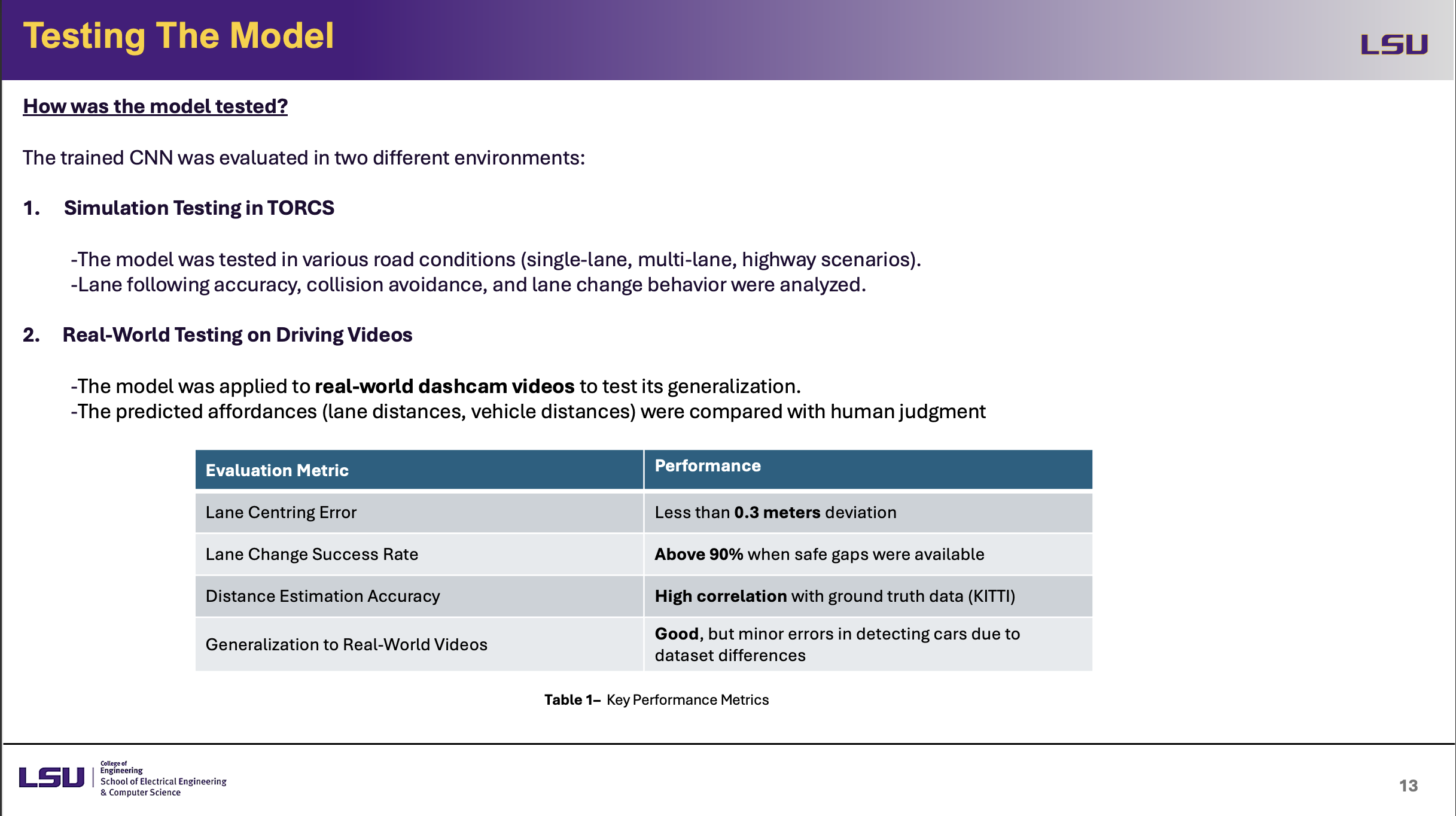

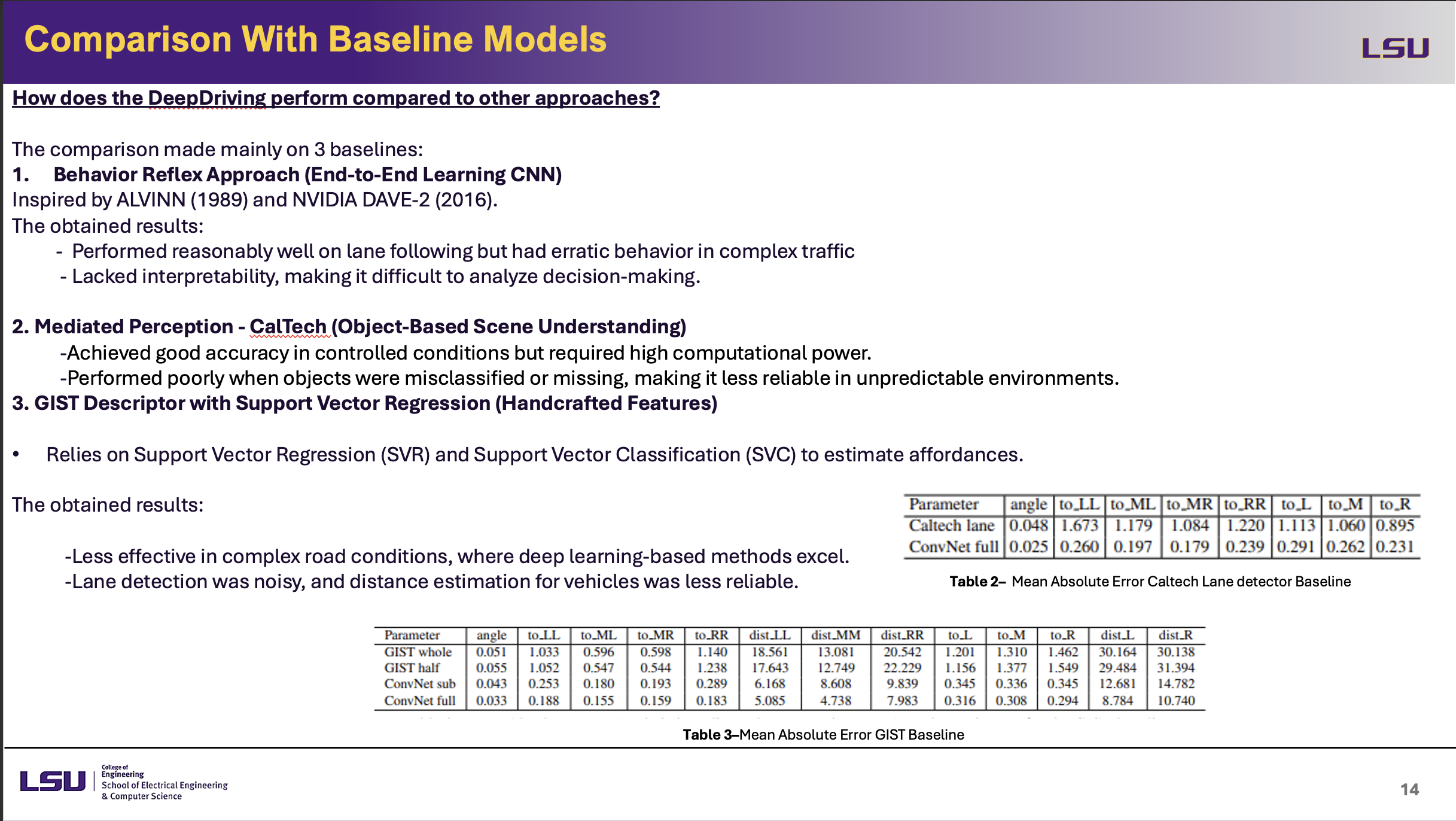

The paper "DeepDriving: Learning Affordance for Direct Perception in Autonomous Driving" proposes predicting a small set of key driving affordances from the input image, rather than parsing the entire scene or mapping images directly to driving commands. Traditional Mediated Perception methods require complex scene reconstruction, while Behavior Reflex methods lack interpretability. The proposed Direct Perception model uses a Convolutional Neural Network (CNN) to estimate 13 affordance indicators, such as the heading angle relative to the road, distances to lane markings, and distances to surrounding vehicles. The study argues that Direct Perception sits between the two earlier paradigms, improving efficiency and interpretability while generalizing to real-world driving scenarios.
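To make the idea of driving from affordances concrete, here is a minimal Python sketch of how a handful of predicted indicators could feed a simple controller. It is an illustration only: the `Affordances` fields, the sign conventions, the proportional steering rule, and the `gain`, `cruise`, and `min_gap` values are all assumptions made for this post, not the paper's exact controller or indicator names.

```python
from dataclasses import dataclass


@dataclass
class Affordances:
    """Hypothetical subset of the 13 indicators a CNN would predict."""
    angle: float             # heading error vs. road direction, rad (+ = pointing right)
    to_marking_left: float   # distance to the left lane marking, m
    to_marking_right: float  # distance to the right lane marking, m
    dist_ahead: float        # distance to the preceding car in the lane, m


def steering_command(a: Affordances, gain: float = 1.0) -> float:
    """Proportional steering in [-1, 1]; positive means steer left."""
    lane_width = a.to_marking_left + a.to_marking_right
    # Positive offset: the car sits to the right of the lane centre.
    offset = (a.to_marking_left - a.to_marking_right) / 2.0
    raw = gain * (a.angle + offset / lane_width)
    return max(-1.0, min(1.0, raw))


def target_speed(a: Affordances, cruise: float = 20.0, min_gap: float = 5.0) -> float:
    """Reduce speed (m/s) linearly as the gap to the preceding car shrinks."""
    return max(0.0, min(cruise, a.dist_ahead - min_gap))


if __name__ == "__main__":
    state = Affordances(angle=0.0, to_marking_left=2.4,
                        to_marking_right=1.2, dist_ahead=10.0)
    print(steering_command(state), target_speed(state))
```

The point of the sketch is that each controller input is a quantity a human can read and check, which is where the interpretability advantage over a direct image-to-command mapping comes from.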

Presentation Breakdown

Discussion and Class Insights

Q1: Can we fully train self-driving cars in simulation? Do you know any other simulation environments?

George: George says he has observed that AI was initially trained on games like chess and Go and later moved on to more complex environments. He wonders if a similar approach could apply to self-driving by using real-world dashcam footage to analyze crash scenarios and develop AI behaviors that avoid them. George suggests that simulation is especially useful for training AI in rare but critical situations, such as crash avoidance. By continuously gathering data from autonomous vehicles and feeding it into simulations, AI could improve its decision-making. However, real-world data remains essential, as simulations alone may not capture all unpredictable scenarios. In summary, George believes that while simulations are valuable for refining AI, a hybrid approach combining simulation and real-world data is necessary for training reliable self-driving cars.

Bassel: Bassel says that while simulation is useful, there might be unexpected scenarios in real driving that simulations can't fully capture. He gives an example of a Tesla accident in Saudi Arabia, where a car crashed into a camel, highlighting how self-driving models may struggle with rare and unpredictable obstacles. This shows the need for real-world testing to improve AI decision-making in diverse environments.

Q2: In this paper, 13 affordances are used. What if we define more affordances?

Bassel: Bassel says that the researchers may have already identified the most important affordances, so increasing the number of affordances does not necessarily improve performance. Adding more affordances could increase complexity without significant benefits, and it may lead to unnecessary computational overhead. Instead, focusing on optimizing the existing affordances might be a more effective approach.

Q3: If all cars on the road were AI-driven, would traffic flow better with purely machine-optimized decision-making?

Bassel: Bassel says that if all cars were AI-driven, traffic flow could improve due to optimized decision-making and reduced human errors. However, he also notes that challenges like unpredictable situations, system failures, and ethical concerns must be addressed. He suggests that while AI can enhance efficiency, a fully AI-driven system would require advanced infrastructure and thorough testing to ensure safety.

Audience Questions and Answers

Professor: Could you explain behavior reflex models? How do they work compared to mediated perception?

Ruslan: Yes, there are key differences between the two. Mediated perception involves perception, localization, planning, and control, meaning it analyzes the entire scene before making a decision. In contrast, behavior reflex models rely on a single AI agent making decisions. These models are trained by recording human driving behavior for extended periods, such as 12 hours, to learn reflexive responses. For example, if a car stops, the model learns how a human would typically react in that situation.
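As a rough illustration of the "record humans, then imitate" idea Ruslan describes, the toy Python sketch below replays the human action logged for the most similar past observation. Everything here is hypothetical: the `demonstrations` log, its two features, and the nearest-neighbour lookup are stand-ins chosen for brevity. A real behavior reflex system would train a neural network on camera images instead.

```python
import math

# Hypothetical log of human driving:
# (gap to the car ahead in m, own speed in m/s) -> brake command in [0, 1]
demonstrations = [
    ((50.0, 20.0), 0.0),
    ((20.0, 20.0), 0.3),
    ((8.0, 15.0), 0.8),
    ((3.0, 5.0), 1.0),
]


def reflex_policy(observation, log=demonstrations):
    """Return the human action recorded for the most similar observation."""
    nearest = min(log, key=lambda pair: math.dist(pair[0], observation))
    return nearest[1]


if __name__ == "__main__":
    # A car ahead is close: the policy copies the hard braking a human used.
    print(reflex_policy((9.0, 14.0)))
```

Note that the policy outputs a command without any intermediate description of the scene, which is why this family of methods is hard to interpret.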

Professor: What type of input data does the behavioral reflex model use?

Ruslan: The model primarily uses sensor data rather than images. However, raw images are still part of the input, as the model processes visual information alongside sensor readings.

Professor: How does the enabling model record human behavior? How does it work in the learning process?

Ruslan: It’s a combination of both raw images and sensor data. The system records how the car behaves in response to different driving scenarios, capturing patterns in human decision-making. This data is then used to train the AI model, allowing it to mimic human reflexes in similar situations.

Obiora: Have there been real-world implementations of this method in popular self-driving car companies? What test candidates are used for perception and decision-making?

Ruslan: I haven’t encountered real-world examples of this specific method being implemented in commercial self-driving cars. Most of the research is still in the theoretical stage, primarily explored through academic papers. However, there may be more recent studies building on these concepts.

Obiora: Have these methods been applied to self-parking or other driving conditions?

Ruslan: Self-parking is a bit simpler compared to this approach. This method focuses more on decision-making in dynamic driving conditions, such as slowing down when there’s a car ahead, overtaking vehicles, and lane changes. While self-parking systems rely on sensors and predefined movements, this method aims to optimize real-time driving decisions.